Language Log » Spoken command injection on Google/Siri/Cortana/Alexa


You could have predicted that as soon as speech I/O became a mass-market app, speech-based hacks would appear. And here comes one — Andy Greenberg, "Hackers can silently control Siri from 16 feet away", Wired 10/14/2015:

SIRI MAY BE your personal assistant. But your voice is not the only one she listens to. As a group of French researchers have discovered, Siri also helpfully obeys the orders of any hacker who talks to her—even, in some cases, one who’s silently transmitting those commands via radio from as far as 16 feet away.

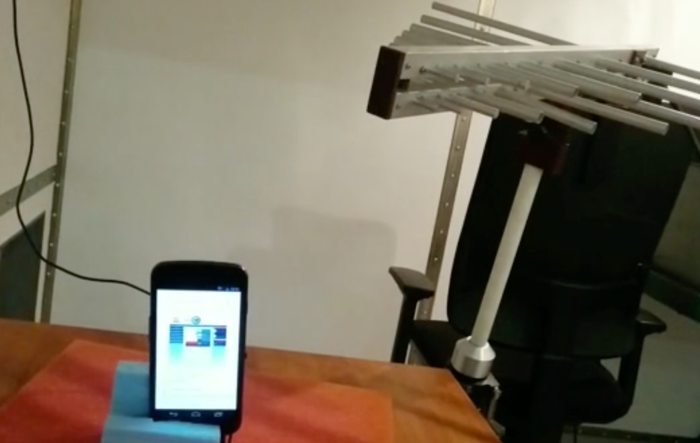

A pair of researchers at ANSSI, a French government agency devoted to information security, have shown that they can use radio waves to silently trigger voice commands on any Android phone or iPhone that has Google Now or Siri enabled, if it also has a pair of headphones with a microphone plugged into its jack. Their clever hack uses those headphones’ cord as an antenna, exploiting its wire to convert surreptitious electromagnetic waves into electrical signals that appear to the phone’s operating system to be audio coming from the user’s microphone. Without speaking a word, a hacker could use that radio attack to tell Siri or Google Now to make calls and send texts, dial the hacker’s number to turn the phone into an eavesdropping device, send the phone’s browser to a malware site, or send spam and phishing messages via email, Facebook, or Twitter.

A technical paper on this method has been published — C. Kasmi & J. Lopes Esteves, "IEMI Threats for Information Security: Remote Command Injection on Modern Smartphones", IEEE Transactions on Electromagnetic Compatibility, 8/13/2015. They seem to have considered a wide variety of practical issues, e.g.

1) Permanent Activation: The voice control command has been activated by default by the user. This means that the voice command service starts as soon as a keyword is pronounced by the user. The experiments demonstrated that it is possible to trigger voice commands remotely by emitting an AM-modulated signal containing the keyword followed by some voice commands at 103 MHz (this frequency is given as an example as it is related to a specific model). The resulting electric signal induced in the microphone cable of the headphones is correctly interpreted by the voice command interface.

2) User Activation: The voice command is not activated by default and a long hardware button press is required for launching the service. In this case, we have worked on injecting a specially crafted radio signal to trigger the activation of the voice command interpreter by emulating a headphones command button press. It was shown that, thanks to a FM modulated signal at the same emitted frequency, we were able to launch the voice command service and to inject the voice command.

3) Discussion: It was also observed that the minimal field required around the target was in the range of 25–30 V/m at 103 MHz, which is close to the limit accepted for human safety but higher than the required immunity level of the device (3 V/m). Thus, smartphones could be disturbed by the parasitic field. Nevertheless, no collateral effects have been encountered during our experiments. Moreover, depending on the cable arrangement and the cable length (between 1 and 1.20 m), it has been observed that the efficient frequency leading to command execution varies in the 80–108 MHz range.
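For readers who haven't met amplitude modulation since physics class, here is a minimal sketch of what "an AM-modulated signal containing the keyword followed by some voice commands" means. It is an assumption-laden software simulation, not the researchers' method. The carrier is scaled down from the paper's example of 103 MHz to a hypothetical 20 kHz so that a plain Python list can hold it. A 440 Hz test tone stands in for recorded speech. The modulation index of 0.5 and the helper names `am_modulate`, `envelope_detect` and `recover_message` are my own choices. Nothing is transmitted, and neither the headphone cable nor the phone's input circuitry is modeled. The rectify-and-smooth envelope detector is just the textbook way to get audio back out of an AM signal, not a claim about how the phone's electronics end up recovering it.

```python
import math


def am_modulate(message, carrier_hz, sample_rate, mod_index=0.5):
    """Full-carrier AM: s[n] = (1 + m * x[n]) * cos(2*pi*fc*n/fs).

    `message` samples are assumed to lie in [-1, 1]; with mod_index <= 1 the
    envelope (1 + m*x) never goes negative, so an envelope detector can
    recover it.
    """
    return [
        (1.0 + mod_index * x) * math.cos(2.0 * math.pi * carrier_hz * n / sample_rate)
        for n, x in enumerate(message)
    ]


def envelope_detect(signal, window):
    """Crude envelope detector: full-wave rectify, then a moving-average low-pass.

    `window` should span a whole number of carrier periods so the carrier
    ripple averages out. The output lags the input by about (window - 1) / 2
    samples, and the first `window - 1` outputs average over a partial window.
    """
    rectified = [abs(v) for v in signal]
    out = []
    running = 0.0
    for i, v in enumerate(rectified):
        running += v
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))
    return out


def recover_message(envelope, mod_index=0.5):
    """Normalise away the rectifier gain using the mean level, then invert
    the 1 + m*x mapping. Assumes the message has roughly zero mean."""
    level = sum(envelope) / len(envelope)
    return [(e / level - 1.0) / mod_index for e in envelope]


if __name__ == "__main__":
    fs = 200_000           # sample rate (Hz), chosen for the simulation
    fc = 20_000            # hypothetical stand-in carrier (Hz); the paper's example was 103 MHz
    tone_hz = 440          # test tone standing in for a spoken command
    n = fs // 20           # 50 ms of signal
    message = [math.sin(2 * math.pi * tone_hz * k / fs) for k in range(n)]
    rf = am_modulate(message, fc, fs)
    window = 5 * fs // fc  # five carrier periods
    recovered = recover_message(envelope_detect(rf, window))
    print([round(v, 2) for v in recovered[window::1000]])
```

The recovered samples track the original tone, delayed by about half the smoothing window and slightly attenuated by the moving average. The moving average was picked for brevity over a proper low-pass filter.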

They also discuss some simple hardware and software changes for defeating such attacks.

Meanwhile, I wonder whether any (text or video) thrillers have picked up on this idea?

October 18, 2015 @ 12:44 pm · Filed by Mark Liberman under HLT