Guccifer 2.0 NGP/VAN Metadata Analysis – The Forensicator

Acknowledgements

Thanks go out to Elizabeth Vos at Disobedient Media, who was the first to report on this analysis; her article can be read here. Thanks also to Adam Carter, who maintains the g-2.space web site, the one-stop shop for information related to Guccifer 2.0. You can reach Elizabeth and Adam on Twitter.

Terminology

The abbreviation “MB/s”, used below, refers to “megabytes per second”. This should not be confused with the abbreviations “Mb/s” or “Mbps”, which refer to “megabits per second”; these latter abbreviations are often used by Internet Service Providers (ISPs) to express the transfer speeds available on their networks. As a rule of thumb, divide Mb/s by 8 to arrive at an equivalent rate expressed in MB/s.
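A minimal Python sketch of the conversion follows. The function names are hypothetical, and the sketch ignores protocol overhead, so real throughput on an ISP link will be somewhat lower than the converted figure:

```python
BITS_PER_BYTE = 8


def megabits_to_megabytes(rate_mb_per_s: float) -> float:
    """Convert an ISP-style rate in Mb/s (megabits) to MB/s (megabytes)."""
    return rate_mb_per_s / BITS_PER_BYTE


def megabytes_to_megabits(rate_mbytes_per_s: float) -> float:
    """Convert a rate in MB/s (megabytes) to Mb/s (megabits)."""
    return rate_mbytes_per_s * BITS_PER_BYTE


# The 23 MB/s rate estimated later in this study, in ISP terms:
print(megabytes_to_megabits(23))    # 184
# A "100 Mbps" Internet plan, in the units used in this study:
print(megabits_to_megabytes(100))   # 12.5
```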

Updates

2017-08-24: Three companion blog posts have been added:

Below, an addendum has been added to provide context for the Internet transfer speed statements made in the “Findings” section and Conclusion 7.

2017-08-02: The section starting at Conclusion 7, which discusses the calculated 23 MB/s transfer rate, has been augmented to refer to a recently added blog entry, The Need for Speed, which documents measured transfer rates for various media types and Internet topologies.

Overview

This study analyzes the file metadata found in a 7zip archive file, 7dc58-ngp-van.7z, attributed to the Guccifer 2.0 persona. For an in-depth analysis of various aspects of the controversy surrounding Guccifer 2.0, refer to Adam Carter’s blog, Guccifer 2.0: Game Over.

Addendum

When Forensicator began his review of the metadata in the NGP VAN 7zip file disclosed by Guccifer 2, he had a simple impression of how Guccifer 2 operated, based upon Guccifer 2’s own statements and observations made by a security firm called ThreatConnect. Forensicator viewed Guccifer 2 as a lone wolf hacker who lived somewhere in Eastern Europe or Russia; he used a Russian-aligned VPN service to mask his IP address.

Forensicator’s assumptions regarding Guccifer were not clearly stated, and this led to some confusion and controversy regarding claims in the report related to achievable transfer speeds over the Internet. Further, as the review process proceeded, alternative theories were suggested; they placed additional pressure on the Internet transfer speed claims and raised some additional interesting questions.

Forensicator’s initial view of Guccifer 2 fed into this statement in the Findings section below: “Due to the estimated speed of transfer (23 MB/s) calculated in this study, it is unlikely that this initial data transfer could have been done remotely over the Internet.” That finding came under fire, partly because when taken out of context, it was counter to many people’s experience.

Hopefully, with the context now more clearly stated, the Internet transfer speed claims will make more sense.

Findings

Based on the analysis that is detailed below, the following key findings are presented:

- On 7/5/2016 at approximately 6:45 PM Eastern time, someone copied the data that eventually appears in the “NGP VAN” 7zip file (the subject of this analysis). This 7zip file was published by a persona named Guccifer 2 two months later, on September 13, 2016.

- Due to the estimated speed of transfer (23 MB/s) calculated in this study, it is unlikely that this initial data transfer could have been done remotely over the Internet.

- The initial copying activity was likely done from a computer system that had direct access to the data. By “direct access” we mean that the individual who was collecting the data either had physical access to the computer where the data was stored, or the data was copied over a local high speed network (LAN).

- They may have copied a much larger collection of data than the data present in the NGP VAN 7zip. This larger collection of data may have been as large as 19 GB. In that scenario the NGP VAN 7zip file represents only 1/10th of the total amount of material taken.

- This initial copying activity was done on a system where Eastern Daylight Time (EDT) settings were in force. Most likely, the computer used to initially copy the data was located somewhere on the East Coast.

- The data was likely initially copied to a computer running Linux, because the file last modified times all reflect the apparent time of the copy, and this is a characteristic of the Linux ‘cp’ command (using default options).

- A Linux OS may have been booted from a USB flash drive and the data may have been copied back to the same flash drive, which will likely have been formatted with the Linux (ext4) file system.

- On September 1, 2016, two months after copying the initial large collection of (alleged) DNC related content (the so-called NGP/VAN data), a subset was transferred to working directories on a system running Windows. The .rar files included in the final 7zip file were built from those working directories.

- The computer system where the working directories were built had Eastern Daylight Time (EDT) settings in force. Most likely, this system was located somewhere on the East Coast.

- The .rar files and plain files that eventually end up in the “NGP VAN” 7zip file disclosed by Guccifer 2.0 on 9/13/2016 were likely first copied to a USB flash drive, which served as the source data for the final 7zip file. There is no information to determine when or where the final 7zip file was built.

Analysis

The Guccifer 2 “NGP VAN” files are found in a password protected 7zip file; instructions for downloading this 7zip file can be found at https://pastebin.com/fN9uvUE0.

Technical note: the size of the 7zip file is 711,396,436 bytes and the MD5 sum is: a6ca56d03073ce6377922171fc8b232d.
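A minimal Python sketch like the following can check a downloaded copy against these figures. The helper names (md5_of_chunks, md5_of_file, matches_published_copy) are hypothetical. Only the size and digest come from this study:

```python
import hashlib
import os

EXPECTED_SIZE = 711_396_436
EXPECTED_MD5 = "a6ca56d03073ce6377922171fc8b232d"


def md5_of_chunks(chunks) -> str:
    """Hex MD5 digest of an iterable of byte strings."""
    digest = hashlib.md5()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def md5_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex MD5 of a file, read in 1 MiB chunks so large archives fit in memory."""
    with open(path, "rb") as f:
        return md5_of_chunks(iter(lambda: f.read(chunk_size), b""))


def matches_published_copy(path: str) -> bool:
    """True if the file has the size and MD5 quoted for 7dc58-ngp-van.7z."""
    # Check the (cheap) size first; only hash when the size matches.
    return (os.path.getsize(path) == EXPECTED_SIZE
            and md5_of_file(path) == EXPECTED_MD5)
```

For an unmodified download, `matches_published_copy("7dc58-ngp-van.7z")` should return True.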

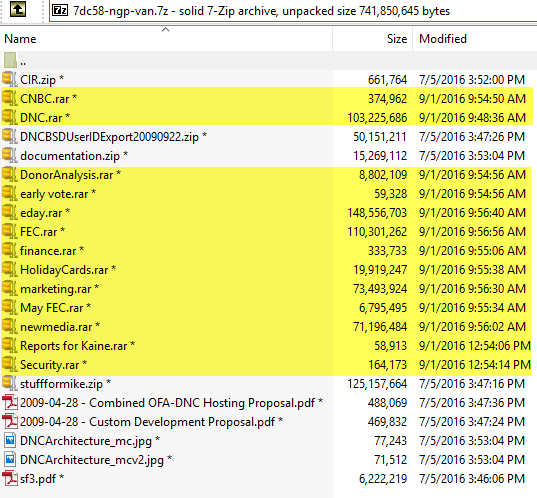

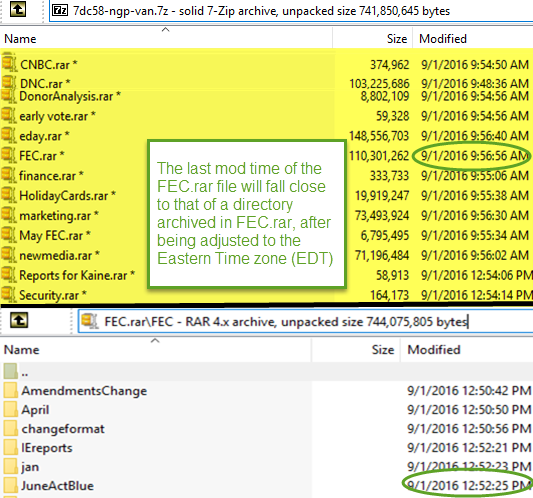

This .7z file contains several .rar files – one for each top-level directory, as shown below.

The times shown above are in Pacific Daylight Time (PDT). The embedded .rar files are highlighted in yellow. The “*” after each file indicates that the file is password-protected (encrypted). This display of the file entries is shown when the .7z file is opened; a password is required to extract the constituent files. This aspect of the .7z file likely motivated archiving the sub-directories (e.g. CNBC and DNC) into .rar files. Doing so effectively hides the structure of the sub-directories unless the password is provided and the sub-directories are then extracted. The last modification dates indicate that the .rar files were built on 9/1/2016 and all the other files were copied on 7/5/2016. Note that the seconds field of every time is even (accurate only to the nearest 2 seconds); the significance of this property will be discussed near the end of this analysis. The files copied on 7/5/2016 have last modified times that are closely clustered around 3:50 PM (PDT); the significance of those times will be described below.

The Guccifer 2 “NGP/VAN” file structure is populated by opening the .7z file and then extracting the top-level files inclusive of the .rar files. The .rar files are further unpacked (using WinRAR) into directories with a name derived by dropping the .rar suffix.

Note: although other archive programs claim to handle .rar files, only WinRAR will reliably restore the archived files, inclusive of their sub-microsecond last modification times.

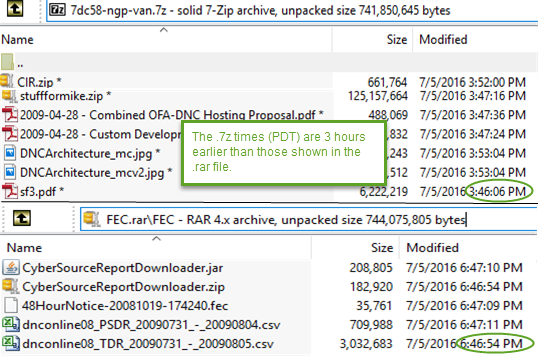

The times recorded in those .rar files are local times (relative to the time zone of the system where the archive was built); this determination is detailed in the blog post “RAR Times: Local or UTC?”. The times recorded in the .7z file are absolute (UTC) times. If you look at the recorded .rar file times, you will see times like “7/5/2016 6:39:18 PM”. The times in the .7z file will be at some offset to that, depending on your time zone. For example, if you are in the Pacific (daylight saving) time zone, the files shown in the .7z file will read 3 hours earlier than those shown in the .rar files, as shown below.
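The 3-hour difference follows directly from the UTC offsets. This minimal Python sketch assumes fixed offsets of UTC-4 (EDT) and UTC-7 (PDT). Those offsets hold because US daylight saving time was in effect on both dates of interest. The sample UTC value is a hypothetical .7z entry corresponding to the 6:39:18 PM example above:

```python
from datetime import datetime, timedelta, timezone

# Fixed offsets (assumption): valid for July and September 2016, when US
# daylight saving time was in effect.
EDT = timezone(timedelta(hours=-4), "EDT")
PDT = timezone(timedelta(hours=-7), "PDT")


def utc_to_zone(utc_time: datetime, zone: timezone) -> datetime:
    """Render an absolute (UTC) timestamp, as stored in a .7z header, in a zone."""
    if utc_time.tzinfo is None:
        utc_time = utc_time.replace(tzinfo=timezone.utc)
    return utc_time.astimezone(zone)


# Hypothetical .7z entry: 22:39:18 UTC on 7/5/2016.
stored = datetime(2016, 7, 5, 22, 39, 18, tzinfo=timezone.utc)
seen_in_pdt = utc_to_zone(stored, PDT)  # 2016-07-05 15:39:18-07:00
seen_in_edt = utc_to_zone(stored, EDT)  # 2016-07-05 18:39:18-04:00

# Difference between the two wall-clock readings of the same instant.
shift = seen_in_edt.replace(tzinfo=None) - seen_in_pdt.replace(tzinfo=None)
print(shift)  # 3:00:00
```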

In this case, we need to adjust the .7z file times to reflect Eastern Time. If you are on the West Coast, a command like the following will make the adjustment. Run it from a Cygwin prompt in the directory where the .7z contents were extracted:

    find . -exec touch -m -r {} -d '+3 hour' {} \;

The .rar files can be unpacked normally because they will appear with the same times as shown in the archive.

Conclusion 1: The DNC files were first copied to a system which had Eastern Time settings in effect; therefore, this system was likely located on the East Coast. This conclusion is supported by the observation that the .7z file times, after adjustment to East Coast time, fall into the range of the file times recorded in the .rar files.

Next, we generate (for example, using a Cygwin bash prompt) a tab-separated list of files sorted by last modified date.

    echo -e 'Top\tPath\tFile\tLast Mod\tSize' > ../guc2-files.txt
    # Truncate the 10 fractional digits in last mod time to 3.
    # Excel won't print more than 3 fractional digits anyway.
    find * -type f -printf '%h\t%f\t%TF %TT\t%s\n' | \
      sort -t$'\t' -k3 -k1 -k2 | \
      perl -F'/\t/' -lane '$F[2] =~ s/(?<=\.\d{3})\d+$//; ($top=$F[0])=~s{/.*}{}; print join ("\t", $top, @F);' \
      >> ../guc2-files.txt

This file is then imported into an Excel spreadsheet for analysis.

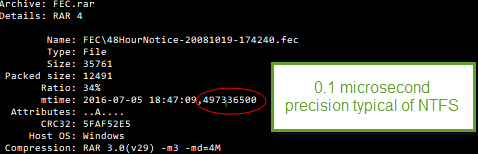

Many archive file formats (e.g., zip and 7zip) record file times only to whole-second resolution. The .rar format, however, records file times at a higher (nanosecond) resolution. This can be difficult to confirm, because the WinRAR GUI displays only whole seconds. However, the included command-line utility, rar, can display the sub-second part; its lt (“list technical”) command provides further detail. For example, the following command will list additional detail on the file 48HourNotice-20081019-174240.fec in the FEC.rar archive.

"c:\Program Files\WinRAR\rar" lt FEC.rar FEC\48HourNotice-20081019-174240.fec

Conclusion 2: The DNC files were first copied to a file system formatted either as NTFS (typically used on Windows systems) or as Linux ext4. This conclusion is supported by the observation that the .rar file(s) show file last modified times (mtime) with 7 fractional decimal digits (0.1 microsecond resolution); this is a characteristic of NTFS file systems.

It is possible that the DNC files were initially copied to a system running Linux and then later copied to a Windows system. Modern Linux implementations use the ext4 file system by default; ext4 records file times in nanoseconds. Thus, if the files were initially copied to a Linux ext4 file system and subsequently copied to a Windows NTFS file system, the file times would have been preserved (truncated) to the 0.1 microsecond precision shown above.
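A short Python sketch of the Windows FILETIME representation can illustrate the truncation from nanoseconds to 0.1 microsecond units. FILETIME is a count of 100-nanosecond intervals since 1601-01-01 UTC. The function names and the sample timestamp are hypothetical. The sketch models only the precision loss, not how any particular copy tool performs the conversion:

```python
# Seconds between the FILETIME epoch (1601-01-01) and the Unix epoch (1970-01-01).
EPOCH_DELTA_S = 11_644_473_600
NS_PER_TICK = 100               # one FILETIME tick = 100 ns = 0.1 microsecond
TICKS_PER_SECOND = 10_000_000


def unix_ns_to_filetime(unix_ns: int) -> int:
    """Convert an ext4-style nanosecond Unix time to FILETIME ticks.
    Floor division discards the last two nanosecond digits, which NTFS
    cannot store."""
    return (unix_ns + EPOCH_DELTA_S * 1_000_000_000) // NS_PER_TICK


def fraction_digits(filetime: int) -> str:
    """Sub-second part of a FILETIME value as 7 decimal digits."""
    return f"{filetime % TICKS_PER_SECOND:07d}"


# Hypothetical ext4 mtime with 9 fractional digits: ...18.123456789 s
ext4_mtime_ns = 1_467_758_358_123_456_789
print(fraction_digits(unix_ns_to_filetime(ext4_mtime_ns)))  # 1234567
```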

We observe that the last modified times are clustered together in a 14-minute time period on 2016-07-05. If the DNC files were copied in the usual way to a computer running a Windows operating system (e.g., using drag-and-drop in File Explorer), the last modified times would typically not change from the original. The create time would change instead (to the time of the copy). By default, Windows File Explorer typically shows only the last modified time. By contrast, a file copy operation using the ‘cp’ command-line utility commonly found on UNIX systems (e.g., Linux and Mac OS X) will by default change the last modified time to the time that the copied file was written.
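Python's standard library can reproduce the difference between the two behaviors; it is used here only as a stand-in. shutil.copy copies data and permission bits but not timestamps, much like a plain cp. shutil.copy2 also copies timestamps, much like cp -p or, per the description above, a File Explorer copy. The file names in this sketch are hypothetical:

```python
import os
import shutil
import tempfile
import time


def demo_copy_mtimes():
    """Return (source, plain-copy, metadata-preserving-copy) mtimes.
    shutil.copy stamps the copy with the time it was written;
    shutil.copy2 carries the source mtime over to the copy."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "source.txt")
        with open(src, "w") as f:
            f.write("example")
        # Backdate the source by one day so the difference is obvious.
        old = time.time() - 86_400
        os.utime(src, (old, old))

        plain = shutil.copy(src, os.path.join(tmp, "plain.txt"))
        preserved = shutil.copy2(src, os.path.join(tmp, "preserved.txt"))

        return (os.path.getmtime(src),
                os.path.getmtime(plain),
                os.path.getmtime(preserved))


src_mtime, plain_mtime, preserved_mtime = demo_copy_mtimes()
print(plain_mtime - src_mtime > 3600)           # True: mtime reset to copy time
print(abs(preserved_mtime - src_mtime) < 1e-3)  # True: mtime carried over
```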

Conclusion 3: The DNC files may have been copied using the ‘cp’ command (which is available on Linux, Windows, and Mac OS X in some form). This (tentative) conclusion is supported by the observation that all of the file last modified times were changed to the apparent time of the copy operation. Other scenarios may produce this pattern of last modified times, but none were immediately apparent to this author at the time that this article was published.

The combination of an NTFS file system and the use of the UNIX ‘cp’ command is sufficiently unusual to encourage a further search for a more plausible scenario/explanation. One scenario that fits the facts would be that the DNC files were initially copied to a system running Linux (e.g., Ubuntu). The system might have had Linux installed on the system’s hard drive, or Linux may have been booted from a USB flash drive; bootable flash drive images with Linux installed on them are widely available.

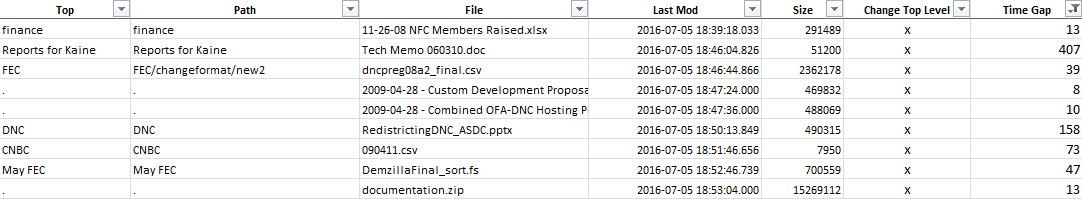

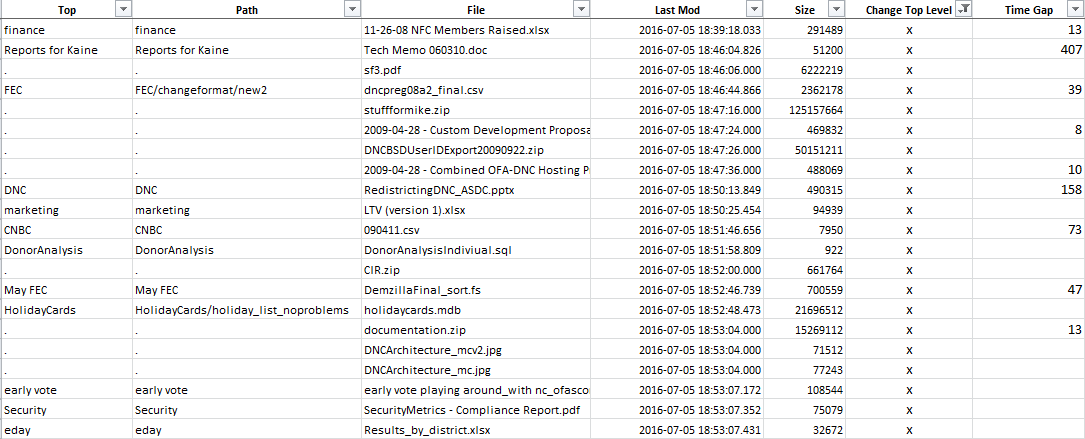

A review of the DNC file metadata leads to the observation that significant “time gaps” appear between various top-level directories and files. In the spreadsheet, we first mark (with x’s) the places where the top-level directory name changes or the top-level directory is the root (“.”) directory. We then calculate the “time gap” for each entry. It is the difference between the entry’s last modified time and that of the previous entry, minus an estimate of the time needed to transfer the current entry (its size divided by the average transfer speed). Subtracting the transfer time moves us from the entry’s last modified time (when its write finished) back to the likely time that its transfer started. We use a cutoff of 3 seconds to filter out anomalies due to normal network/OS variations. Here are the entries with significant time gaps.
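A minimal Python sketch of the spreadsheet calculation follows. It assumes the entries are already sorted by last modified time and that a single average rate applies to every file. The Entry type, function name, and sample values are hypothetical:

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Entry:
    top: str      # top-level directory name, or "." for files in the root
    mtime: float  # last modified time, in seconds
    size: int     # file size, in bytes


def significant_gaps(entries: List[Entry], rate_bytes_per_s: float,
                     cutoff_s: float = 3.0) -> List[Tuple[int, bool, float]]:
    """Return (index, top_level_changed, gap_seconds) for each gap >= cutoff_s.

    `entries` must be sorted by mtime. The gap before entry i is the time
    between the previous entry's mtime (end of its write) and the estimated
    start of entry i's transfer, i.e. mtime_i - size_i / rate.
    """
    found = []
    for i in range(1, len(entries)):
        prev, cur = entries[i - 1], entries[i]
        top_changed = cur.top != prev.top or cur.top == "."
        gap = (cur.mtime - prev.mtime) - cur.size / rate_bytes_per_s
        if gap >= cutoff_s:
            found.append((i, top_changed, gap))
    return found


# Hypothetical entries, copied at 1,000,000 bytes/s.
sample = [
    Entry("A", 0.0, 1_000_000),
    Entry("A", 1.0, 1_000_000),   # no gap: exactly one second of transfer
    Entry("B", 11.0, 2_000_000),  # 10 s apart, 2 s of transfer -> 8 s gap
    Entry(".", 11.5, 0),
]
print(significant_gaps(sample, rate_bytes_per_s=1_000_000))  # [(2, True, 8.0)]
```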

Conclusion 4: The overall time to obtain the DNC files found in the 7z file was 14 minutes; a significant part of that time (13 minutes) is allocated to time gaps that appear between several of the top-level files and directories.

Note that significant time gaps always occur at top-level directory changes (only x’s in the “Top-Level Changed” column). To put the time gaps in better perspective, here is a listing of all top-level changes. A top-level change is defined as either a change in the top-level directory name, or when the parent directory of a file is the root (“.”) directory.

In the “Last Mod” ordered list above, top-level directories and files are intermixed, and they are not in alphabetic order. This pattern can be explained by the use of the UNIX cp command. The cp command copies files in “directory order”, which on many systems is unsorted and will appear to be somewhat random.

The time gaps that appear in the chronologically ordered listing above could indicate that these files and directories were chosen from a much larger collection of copied files; this larger collection of files may have first been copied en masse via the ‘cp -r’ command.

Initially, when this data was analyzed, the “time gaps” were attributed to “think time”. The assumption was that the individual who collected the files would copy them in small batches and, between batches, would need some “think time” to find or decide on the next batch to copy. This may be an equally valid way to explain the presence of time gaps at various junctures in the top-level files and folders. However, in this analysis we will assume that a much larger collection of files was initially copied on 7/5/2016; the files in the final .7z file (the subject of this analysis) represent only a small percentage of all the files that were initially collected.

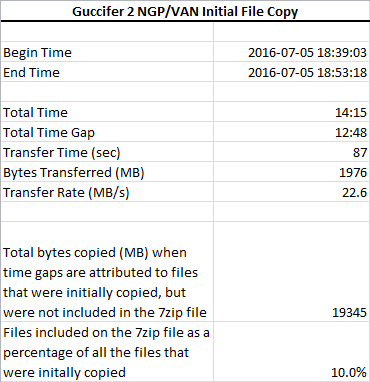

We can estimate the transfer speed of the copy by dividing the total number of bytes transferred by the transfer time. The transfer time is approximated by subtracting the time gap total from the total elapsed time of the copy session. This calculation is shown below.
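A minimal Python sketch of the same arithmetic follows. The function name and the sample figures are hypothetical, chosen only to make the calculation easy to follow:

```python
def estimate_rate(total_bytes: float, elapsed_s: float, gap_total_s: float) -> float:
    """Average transfer rate, counting only the time not spent in gaps."""
    transfer_s = elapsed_s - gap_total_s
    if transfer_s <= 0:
        raise ValueError("the time gaps must be shorter than the elapsed time")
    return total_bytes / transfer_s


# Hypothetical session: 100 MB copied in 60 s, of which 50 s were gaps.
rate = estimate_rate(100e6, elapsed_s=60, gap_total_s=50)
print(rate / 1e6, "MB/s")  # 10.0 MB/s
```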

Conclusion 5: The lengthy time gaps suggest that many additional files were initially copied en masse and that only a small subset of that collection was selected for inclusion into the final 7zip archive file (that was subsequently published by Guccifer 2).

Given the calculations above, if 1.98 GB were copied at a rate of 22.6 MB/s and all the time gaps were attributed to additional file copying then approximately 19.3 GB in total were initially copied. In this hypothetical scenario, the 7zip archive represents only about 10% of the total amount of data that was initially collected.

Conclusion 6: The initial DNC file collection activity began at approximately 2016-07-05 18:39:02 EDT and ended at 2016-07-05 18:53:17 EDT. This conclusion is supported by the observed last modified times and the earlier conclusion that the exfiltrated files were copied to a computer located in the Eastern Time zone.
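The extrapolation in Conclusion 5 and the session boundaries in Conclusion 6 can be checked together. This minimal Python sketch takes the 1.98 GB and 22.6 MB/s figures from the text and assumes decimal units (1 GB = 1,000 MB). Like the hypothetical scenario above, it also assumes copying continued at the same rate for the whole session:

```python
from datetime import datetime

# Figures quoted in this study (Conclusions 5 and 6); times are EDT.
SESSION_START = datetime(2016, 7, 5, 18, 39, 2)
SESSION_END = datetime(2016, 7, 5, 18, 53, 17)
RATE_MB_S = 22.6      # estimated average transfer rate, MB/s
SELECTED_GB = 1.98    # data present in the 7zip archive

elapsed_s = (SESSION_END - SESSION_START).total_seconds()  # 855 s
# Hypothesis: the time gaps were spent copying files that were not published,
# so the whole session ran at the average rate.
total_gb = RATE_MB_S * elapsed_s / 1000   # assumes 1 GB = 1,000 MB
share = SELECTED_GB / total_gb

print(f"{elapsed_s:.0f} s, {total_gb:.1f} GB, {share:.0%}")  # 855 s, 19.3 GB, 10%
```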

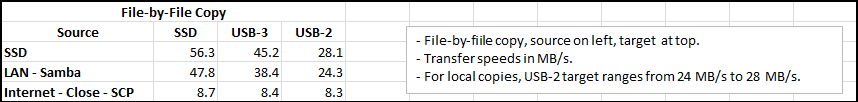

Conclusion 7: A transfer rate of 23 MB/s is estimated for this initial file collection operation. This transfer rate can be achieved when files are copied over a LAN or when copying directly from the host computer’s hard drive. This rate is too fast to support the hypothesis that the DNC data was initially copied over the Internet (especially to Romania).

This transfer rate (23 MB/s) is typically seen when copying local data to a fairly slow (USB-2) thumb drive.

To get a sense of where this 23 MB/s (23 megabytes per second) rate falls in the range of supported speeds for various network and storage media technologies, consult the blog entry titled The Need for Speed. That blog entry describes test results which support the conclusions and observations noted above. Below is one table from that report.

We can see that the estimated 23 MB/s transfer rate is in the same range as the local copy transfer rates shown above.

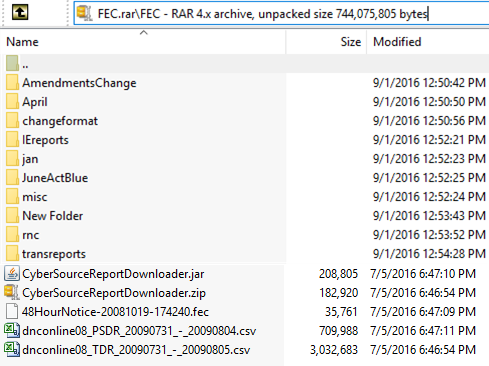

We turn our attention to the .rar files embedded in the .7z file. Here is an illustrative example (FEC.rar).

From this listing of FEC.rar above, we make the following observations:

- The directories were last modified on 9/1/2016 at approximately 12:50 PM.

- The file entries are shown with last modified times on 7/5/2016 at approximately 6:45 PM.

- We note that the last modification date for FEC.rar is 12:56:56 PM EDT on 9/1/2016, after adjusting this time stamp to the Eastern Time zone.

- As mentioned earlier, the seconds part of the file and directory last modified times are recorded in the .rar file with a 0.1 microsecond resolution, which is typical of an NTFS file system.

- The file last modified times retain the 7/5/2016 date; they were not changed to dates and times on 9/1/2016 when the sub-directories were created.

- When WinRAR restored the .rar files it preserved the directory last modification times recorded in the .rar file.

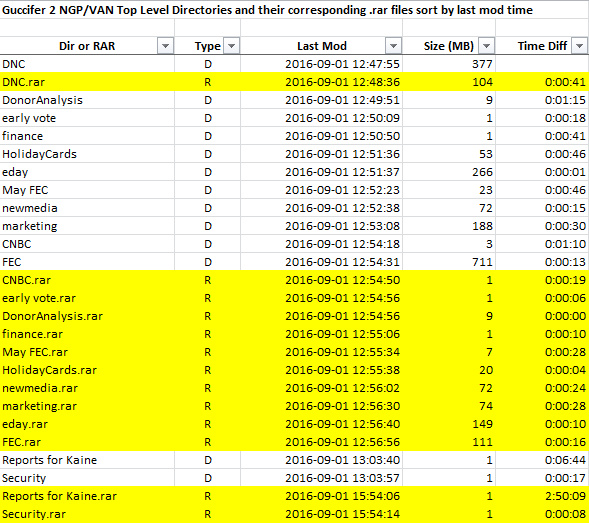

Recall that the NGP/VAN 7zip file has several .rar files which unpack into top-level directories. We can correlate the last modified times of the .rar files with the last modified times of the directories saved in the .rar files as shown below.

Conclusion 8: The .rar files that ultimately are included in the NGP/VAN 7zip file were built on a computer system where the Eastern Daylight Time (EDT) setting was in force. This conclusion is supported by the observation that if the .rar last modified times are adjusted to EDT, they fall into the same range as the last modified times for the directories archived in the .rar files.
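A minimal Python sketch of this correlation follows. Directory times read from the .rar files are treated as EDT wall-clock values. The .rar times taken from the .7z (UTC) are shifted to EDT, and both lists are then sorted together. The function name and sample values are hypothetical. The directory value rounds the “approximately 12:50 PM” noted earlier, and a fixed UTC-4 offset is assumed for 9/1/2016:

```python
from datetime import datetime, timedelta, timezone

EDT = timezone(timedelta(hours=-4), "EDT")  # assumption: DST in force on 9/1/2016


def merged_timeline(dir_times_edt: dict, rar_times_utc: dict) -> list:
    """Combine directory mtimes (EDT wall-clock, as restored from the .rar
    files) with .rar file mtimes (UTC, as stored in the .7z), sorted by EDT."""
    events = [(t, name, "dir") for name, t in dir_times_edt.items()]
    for name, t in rar_times_utc.items():
        edt = t.replace(tzinfo=timezone.utc).astimezone(EDT).replace(tzinfo=None)
        events.append((edt, name, "rar"))
    return sorted(events)


timeline = merged_timeline(
    {"FEC": datetime(2016, 9, 1, 12, 50, 0)},
    {"FEC.rar": datetime(2016, 9, 1, 16, 56, 56)},  # UTC
)
for when, name, kind in timeline:
    print(when, kind, name)
# 2016-09-01 12:50:00 dir FEC
# 2016-09-01 12:56:56 rar FEC.rar
```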

In the following table, we combine the last modified times of the directories restored from the .rar files with the last modified times of the .rar files themselves, and sort by last modified time. The .rar file last modified times shown have been adjusted so that they reflect Eastern Daylight Time (EDT).

From this data, we make the following observations.

- On 9/1/2016, some of the directories that were initially collected on 7/5/2016 were copied to working directories. The working directories were zipped into .rar files that were added to the 7zip file that is the subject of this analysis.

- The working directories likely reside on an NTFS-formatted file system (they have last modified times with 0.1 microsecond resolution – typical of NTFS). The NTFS file system is commonly used in modern Windows operating system installations.

- The DNC directory was the first directory copied to its working directory.

- Shortly after that, “DNC.rar” was created.

- A series of directories (“DonorAnalysis” through “FEC”) were copied to their working directories.

- Shortly after that, the .rar files were created from those working directories.

- Approximately 7 minutes later, two more directories were copied to their working directories: “Reports for Kaine” and “Security“.

- Finally, almost 3 hours after that, the .rar files were created from those two working directories.

- We notice no obvious pattern in the order of choosing the directories to copy to their working copies nor in the creation of .rar files.

- The varying order of copying directories to their working copies and the observation that they may have been copied in at least three separate batches adds support to the theory that these directories were selected from a larger collection of files and directories that was initially collected on 7/5/2016.

Given the lack of uniformity in the metadata, we decided not to try to estimate the transfer times for the copy operations which copied the source directories to their working copies.

A question that comes up at this point is: Why were the directories first copied to a working directory before zipping them into .rar files? Two alternative explanations are offered:

- The larger collection of files that were copied on 7/5/2016 reside on a computer running the Linux operating system. They need to be copied to a Windows operating system if programs like WinRAR (which runs only on Windows) are going to be used. In this case they may have been exported from the Linux system via a Samba network share.

- Or, the larger collection of files that were copied on 7/5/2016 is some distance away from the system where the .rar files will be built; they need to be copied over the Internet. It makes sense to transfer only the directories that have been selected for inclusion into the 7zip file, because copying the entire collection over the Internet may be relatively slow.

Neither of the alternatives above suggests that making local working copies was truly necessary. If we hypothesize that the original collection was exported via a network share, that share could apparently have been used directly. That may not have occurred to the person building the .rar files, or perhaps they had some other motivation to make local copies. One idea is that the local working copies were made to facilitate some internal pruning, but we see no evidence of that; the time gaps occur only at the top level (as discussed earlier).

Conclusion 9: The final copy (on 9/1/2016) from the initial file collection to working directories was likely done with a conventional drag-and-drop style of copy. This conclusion is based on the observation that the file last modified times were preserved when copying from the initial collection to the working copies, unlike the first copy operation on 7/5/2016 (which is attributed to the use of the cp command).

Conclusion 10: The final working directories were likely created on an NTFS file system present on a computer running Windows. This conclusion is based on the following observations: (1) the file timestamps have 0.1 microsecond resolution (a characteristic of NTFS file systems), (2) NTFS file systems are widely used on Windows systems, (3) NTFS file systems are typically not used on USB flash drives, and (4) WinRAR is a Windows-based program and was likely used to build the .rar files.

At the beginning of this analysis, we noted that the seconds part of the file last modified times that appear at the top level in the .7z file were all even (a multiple of 2). FAT-formatted volumes can represent times only to the nearest two (2) seconds, and USB flash drives are typically formatted FAT or FAT32.
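The 2-second limit comes from the 16-bit last-write time field in a FAT directory entry, which stores seconds divided by 2. A minimal Python sketch of that packing follows. The function names are hypothetical. This sketch truncates odd seconds, whereas a given FAT driver may round instead:

```python
from datetime import datetime


def to_dos_time(t: datetime) -> int:
    """Pack a time of day into the 16-bit FAT/DOS format:
    hours in bits 15-11, minutes in bits 10-5, seconds/2 in bits 4-0.
    Odd seconds are truncated here; real drivers may round."""
    return (t.hour << 11) | (t.minute << 5) | (t.second // 2)


def from_dos_time(value: int) -> tuple:
    """Unpack a 16-bit DOS time into (hour, minute, second)."""
    return (value >> 11) & 0x1F, (value >> 5) & 0x3F, (value & 0x1F) * 2


def all_even_seconds(times) -> bool:
    """True if every timestamp's seconds field is even, as expected for
    times that have passed through a FAT volume."""
    return all(t.second % 2 == 0 for t in times)


odd = datetime(2016, 9, 1, 12, 56, 57)
print(from_dos_time(to_dos_time(odd)))  # (12, 56, 56): the odd second is lost
print(all_even_seconds([datetime(2016, 9, 1, 12, 56, 56),
                        datetime(2016, 7, 5, 18, 39, 18)]))  # True
```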

Conclusion 11: The .rar files and plain files that were combined into the final .7z file (the subject of this analysis) were likely copied to a FAT-formatted flash drive first. This conclusion is supported by the observation that the seconds part of every last modified time is an exact multiple of 2.