Google Developers Blog: Firebase

Posted by Brahim Elbouchikhi, Director of Product Management and Matej Pfajfar, Engineering Director

We launched ML Kit at I/O last year with the mission to simplify Machine Learning for everyone. We couldn’t be happier about the experiences that ML Kit has enabled thousands of developers to create. And more importantly, user engagement with features powered by ML Kit is growing more than 60% per month. Below is a small sample of apps we have been working with.

But there is a lot more. At I/O this year, we are excited to introduce four new features.

The Object Detection and Tracking API lets you identify the most prominent object in an image and then track it in real time. You can pair this API with a cloud solution (e.g., Google Cloud’s Product Search API) to create a real-time visual search experience.

When you pass an image or video stream to the API, it will return the coordinates of the primary object as well as a coarse classification. The API then provides a handle for tracking this object's coordinates over time.
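To make the detect-then-track flow concrete, here is a minimal sketch in plain JavaScript. The per-frame result shape (`trackingId`, `boundingBox`, `category`) and the function names are hypothetical stand-ins, not ML Kit's actual API. It approximates "most prominent" as the largest bounding box in the first frame, then follows that object's tracking ID through later frames.

```javascript
// Hypothetical detection results (not ML Kit's API); field names are illustrative.
// boundingBox: { left, top, right, bottom } in pixels.
function boxArea(box) {
  return Math.max(0, box.right - box.left) * Math.max(0, box.bottom - box.top);
}

// Approximate the most prominent object as the one with the largest bounding box.
function primaryObject(detections) {
  let best = null;
  for (const d of detections) {
    if (best === null || boxArea(d.boundingBox) > boxArea(best.boundingBox)) {
      best = d;
    }
  }
  return best;
}

// Follow the primary object of the first frame through every frame by its
// tracking ID; frames where it is not detected yield null.
function trackPrimaryObject(frames) {
  if (frames.length === 0) return [];
  const first = primaryObject(frames[0]);
  if (first === null) return frames.map(() => null);
  return frames.map((detections) => {
    const match = detections.find((d) => d.trackingId === first.trackingId);
    return match ? { category: match.category, boundingBox: match.boundingBox } : null;
  });
}
```

A real app would read these fields from its platform's ML Kit result objects and, for visual search, send a crop of the tracked box to a backend such as Product Search.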

A number of partners have built experiences that are powered by this API already. For example, Adidas built a visual search experience right into their app.

The On-device Translation API allows you to use the same offline models that support Google Translate to provide fast, dynamic translation of text in your app into 58 languages. This API operates entirely on-device so the context of the translated text never leaves the device.

You can use this API to help users communicate with people who don't understand their language, or to translate user-generated content.

To the right, we demonstrate the use of ML Kit’s text recognition, language detection, and translation APIs in one experience.
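The three-stage flow (recognize text, identify its language, translate) can be sketched as a small composition. The stage functions are injected and hypothetical, not ML Kit's API, and they are synchronous here only for brevity; the real on-device APIs are asynchronous. The sketch assumes the language identifier returns a BCP-47 code, or `'und'` when the language is undetermined.

```javascript
// Hypothetical stage signatures (not ML Kit's API):
//   recognizeText(image) -> string
//   identifyLanguage(text) -> BCP-47 code such as 'fr', or 'und' if undetermined
//   translate(text, sourceLang, targetLang) -> string
function recognizeDetectTranslate(image, stages, targetLang) {
  const text = stages.recognizeText(image);
  if (!text) return { text: '', sourceLang: 'und', translated: '' };

  const sourceLang = stages.identifyLanguage(text);
  // Skip translation when the language is unknown or already the target language.
  if (sourceLang === 'und' || sourceLang === targetLang) {
    return { text, sourceLang, translated: text };
  }
  return { text, sourceLang, translated: stages.translate(text, sourceLang, targetLang) };
}
```

Passing the stages in as functions keeps the pipeline logic testable with stubs and independent of any one platform SDK.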

We also collaborated with the Material Design team to produce a set of design patterns for integrating ML into your apps. We are open sourcing implementations of these patterns and hope that they will further accelerate your adoption of ML Kit and AI more broadly.

Our design patterns for machine learning powered features will be available on the Material.io site.

With AutoML Vision Edge, you can easily create custom image classification models tailored to your needs. For example, you may want your app to be able to identify different types of food, or distinguish between species of animals. Whatever your need, just upload your training data to the Firebase console and you can use Google’s AutoML technology to build a custom TensorFlow Lite model for you to run locally on your user's device. And if you find that collecting training datasets is hard, you can use our open-source app, which makes the process simpler and more collaborative.
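An image classifier like this typically produces one confidence score per label. As a hedged sketch of turning that raw output into something an app can show, the function below keeps the top-k labels above a confidence threshold. The parallel `labels`/`scores` inputs and the default `k` and `threshold` values are assumptions for illustration, not part of the AutoML Vision Edge API.

```javascript
// Hypothetical inputs: `labels` (e.g. from the model's label list) and `scores`
// (one confidence per label from the model output) are parallel arrays.
// Returns up to k labels whose confidence is at least `threshold`, best first.
function topLabels(labels, scores, k = 3, threshold = 0.5) {
  if (labels.length !== scores.length) {
    throw new Error('labels and scores must have the same length');
  }
  return labels
    .map((label, i) => ({ label, confidence: scores[i] }))
    .filter((r) => r.confidence >= threshold)
    .sort((a, b) => b.confidence - a.confidence)
    .slice(0, k);
}
```

For a food classifier, `topLabels(['pizza', 'sushi', 'salad'], scores, 1)` would return the single most confident food label, or an empty array if nothing clears the threshold.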

Wrapping up

We are excited by this first year and really hope that our progress will inspire you to get started with Machine Learning. Please head over to g.co/mlkit to learn more or visit Firebase to get started right away.

Posted by Mertcan Mermerkaya, Software Engineer

We have great news for web developers that use Firebase Cloud Messaging to send notifications to clients! The FCM v1 REST API has integrated fully with the Web Notifications API. This integration allows you to set icons, images, actions and more for your Web notifications from your server! Better yet, as the Web Notifications API continues to grow and change, these options will be immediately available to you. You won't have to wait for an update to FCM to support them!

Below is a sample payload you can send to your web clients on browsers that support the Push API:

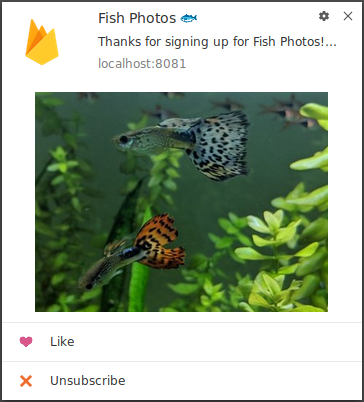
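As a hedged sketch of such a payload, the function below builds a request body for the FCM HTTP v1 `messages:send` endpoint. The title, body text, icon and image paths, URL, and the `buildPhotoNotification` name are placeholders. The `data` fields (`photoId`, `notificationType`) and the `like`/`unsubscribe` action strings are chosen to match the click handler in this post. The click-through URL is set with `webpush.fcm_options.link` here; treat that field choice as an assumption to verify against the FCM Send API documentation, since the post refers to a `click_action` link.

```javascript
// Request body for the FCM HTTP v1 endpoint:
//   POST https://fcm.googleapis.com/v1/projects/<PROJECT_ID>/messages:send
// webpush.notification carries Web Notifications API options, so fields such as
// icon, image, data and actions are passed through to the browser.
// Titles, text, paths and URLs below are placeholders.
function buildPhotoNotification(token, photoId) {
  return {
    message: {
      token,
      webpush: {
        notification: {
          title: 'New photo',
          body: 'Someone shared a photo with you.',
          icon: '/images/icon.png',
          image: `/photos/${photoId}.jpg`,
          // Read back in the service worker as event.notification.data.
          data: { photoId, notificationType: 'photos' },
          // Each `action` string arrives as event.action when its button is clicked.
          actions: [
            { action: 'like', title: 'Like' },
            { action: 'unsubscribe', title: 'Unsubscribe' },
          ],
        },
        // Page opened when the notification body itself is clicked (must be HTTPS).
        fcm_options: { link: 'https://example.com/photos' },
      },
    },
  };
}
```

The result would be sent with `JSON.stringify` and an OAuth 2.0 access token in the `Authorization` header.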

Notice that you can now set new parameters, such as actions, which give the user different ways to interact with the notification. In this example, users can choose between actions to like the photo or to unsubscribe.

To handle action clicks in your app, you need to add an event listener in the default firebase-messaging-sw.js file (or your custom service worker). If an action button was clicked, event.action will contain the string that identifies the clicked action. Here's how to handle the "like" and "unsubscribe" events on the client:

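```javascript
// Assumes the Firebase app and messaging scripts were loaded with importScripts()
// and firebase.initializeApp() was called earlier in this service worker.

// Retrieve an instance of Firebase Messaging so that it can handle background messages.
const messaging = firebase.messaging();

// Add an event listener to handle notification clicks.
self.addEventListener('notificationclick', function(event) {
  // event.action holds the clicked action's string from the payload,
  // or '' when the notification body (not an action button) was clicked.
  if (event.action === 'like') {
    // Like button was clicked. like() is your app's own function;
    // waitUntil keeps the service worker alive until it settles.
    const photoId = event.notification.data.photoId;
    event.waitUntil(Promise.resolve(like(photoId)));
  } else if (event.action === 'unsubscribe') {
    // Unsubscribe button was clicked. unsubscribe() is also app-defined.
    const notificationType = event.notification.data.notificationType;
    event.waitUntil(Promise.resolve(unsubscribe(notificationType)));
  }

  event.notification.close();
});
```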

The SDK will still handle regular notification clicks and redirect the user to your click_action link if provided. To see more on how to handle click actions on the client, check out the guide.

Since different browsers support different parameters on different platforms, it's important to check the browser compatibility documentation to ensure your notifications work as intended. Want to learn more about what the Send API can do? Check out the FCM Send API documentation and the Web Notifications API documentation. If you're using the FCM Send API and you incorporate the Web Notifications API in a cool way, let us know! Find Firebase on Twitter at @Firebase, and on Facebook and Google+ by searching "Firebase".

Posted by Brahim Elbouchikhi, Product Manager

In today's fast-moving world, people have come to expect mobile apps to be intelligent - adapting to users' activity or delighting them with surprising smarts. As a result, we think machine learning will become an essential tool in mobile development. That's why on Tuesday at Google I/O, we introduced ML Kit in beta: a new SDK that brings Google's machine learning expertise to mobile developers in a powerful, yet easy-to-use package on Firebase. We couldn't be more excited!

Getting started with machine learning can be difficult for many developers. Typically, new ML developers spend countless hours learning the intricacies of implementing low-level models, using frameworks, and more. Even for the seasoned expert, adapting and optimizing models to run on mobile devices can be a huge undertaking. Beyond the machine learning complexities, sourcing training data can be an expensive and time-consuming process, especially when considering a global audience.

With ML Kit, you can use machine learning to build compelling features, on Android and iOS, regardless of your machine learning expertise. More details below!

If you're a beginner who just wants to get the ball rolling, ML Kit gives you five ready-to-use ("base") APIs that address common mobile use cases:

With these base APIs, you simply pass in data to ML Kit and get back an intuitive response. For example, Lose It!, one of our early users, used ML Kit to build several features in the latest version of their calorie tracker app. Using our text recognition API and a custom-built model, their app can quickly capture nutrition information from product labels, letting users enter a food's contents from an image.

ML Kit gives you both on-device and Cloud APIs, all in a common and simple interface, allowing you to choose the ones that fit your requirements best. The on-device APIs process data quickly and will work even when there's no network connection, while the cloud-based APIs leverage the power of Google Cloud Platform's machine learning technology to give a higher level of accuracy.
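One way an app might act on this trade-off is to prefer the cloud-based recognizer when accuracy matters and a connection is available, and fall back to the on-device one otherwise. The sketch below is a hypothetical policy, not ML Kit's API: `onDevice` and `cloud` are injected stand-in functions, shown synchronously for brevity.

```javascript
// Hypothetical backends (not ML Kit's API): onDevice(input) and cloud(input)
// each return a recognition result; cloud() may throw on network failure.
function recognizeWithFallback(input, { onDevice, cloud }, { isOnline, preferAccuracy }) {
  if (preferAccuracy && isOnline) {
    try {
      return { backend: 'cloud', result: cloud(input) };
    } catch (err) {
      // Network or quota failure: fall through to the on-device model.
    }
  }
  // Fast path that also works with no network connection.
  return { backend: 'on-device', result: onDevice(input) };
}
```

Because both APIs share a common interface, a policy like this can live in one place and the rest of the app never needs to know which backend answered.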

See these APIs in action on your Firebase console:

Heads up: We're planning to release two more APIs in the coming months. First is a smart reply API allowing you to support contextual messaging replies in your app, and the second is a high density face contour addition to the face detection API. Sign up here to give them a try!

If you're seasoned in machine learning and you don't find a base API that covers your use case, ML Kit lets you deploy your own TensorFlow Lite models. You simply upload them via the Firebase console, and we'll take care of hosting and serving them to your app's users. This way, you can keep your models out of your APK or app bundles, which reduces your app's install size. Also, because ML Kit serves your model dynamically, you can always update your model without having to re-publish your apps.

But there is more. As apps have grown to do more, their size has increased, harming app store install rates and potentially costing users more in data overages. Machine learning can further exacerbate this trend, since models can reach tens of megabytes in size. So we decided to invest in model compression. Specifically, we are experimenting with a feature that allows you to upload a full TensorFlow model, along with training data, and receive in return a compressed TensorFlow Lite model. The technology behind this is evolving rapidly, so we are looking for a few developers to try it and give us feedback. If you are interested, please sign up here.
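For a rough sense of the numbers, a model's weight storage is about its parameter count times the bits stored per parameter. The sketch below does that arithmetic for a made-up 10-million-parameter model. Storing weights as 8-bit values instead of 32-bit floats (quantization) appears only as one common compression technique; it is an assumption here, not a description of how this ML Kit feature compresses models.

```javascript
// Rough weight-storage estimate in megabytes (10^6 bytes): params × bits / 8.
// Ignores file-format overhead and any non-weight data in the model file.
function weightMegabytes(paramCount, bitsPerParam) {
  return (paramCount * bitsPerParam) / 8 / 1e6;
}

// Hypothetical 10-million-parameter model.
const params = 10e6;
const float32Size = weightMegabytes(params, 32); // 32-bit float weights
const int8Size = weightMegabytes(params, 8); // 8-bit quantized weights
console.log(`${float32Size} MB -> ${int8Size} MB`); // prints "40 MB -> 10 MB"
```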

Since ML Kit is available through Firebase, it's easy for you to take advantage of the broader Firebase platform. For example, Remote Config and A/B Testing let you experiment with multiple custom models. You can dynamically switch values in your app, which makes them a great fit for swapping the custom models your users get on the fly. You can even create population segments and experiment with several models in parallel.
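The key property of such an experiment is that each user consistently gets the same model variant. Firebase A/B Testing and Remote Config handle this assignment for you; the sketch below only illustrates the idea with a deterministic hash (32-bit FNV-1a) and is not their algorithm. The `assignModel` name and the model variant names are hypothetical.

```javascript
// 32-bit FNV-1a hash over UTF-16 code units (illustration only).
function fnv1a32(str) {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < str.length; i++) {
    hash ^= str.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by FNV prime, keep unsigned
  }
  return hash >>> 0;
}

// Deterministically map a user ID to one of several model variants,
// so the same user always receives the same custom model.
function assignModel(userId, variants) {
  if (variants.length === 0) throw new Error('no model variants configured');
  return variants[fnv1a32(userId) % variants.length];
}
```

In practice, the chosen model name would come from a Remote Config parameter rather than being computed on the client.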

Other examples include:

We can't wait to see what you'll build with ML Kit. We hope you'll love the product like many of our early customers:

Get started with the ML Kit beta by visiting your Firebase console today. If you have any thoughts or feedback, feel free to let us know - we're always listening!

Posted by Michael Hermanto, Software Engineer, Firebase

We launched the Google URL Shortener back in 2009 as a way to help people more easily share links and measure traffic online. Since then, many popular URL shortening services have emerged and the ways people find content on the Internet have also changed dramatically, from primarily desktop webpages to apps, mobile devices, home assistants, and more.

To refocus our efforts, we're turning down support for goo.gl over the coming weeks and replacing it with Firebase Dynamic Links (FDL). FDLs are smart URLs that allow you to send existing and potential users to any location within an iOS, Android or web app. We're excited to grow and improve the product going forward. While most features of goo.gl will eventually sunset, all existing links will continue to redirect to the intended destination.

Starting April 13, 2018, anonymous users and users who have never created short links before today will not be able to create new short links via the goo.gl console. If you are looking to create new short links, we recommend you check out popular services like Bitly and Ow.ly as alternatives.

If you have existing goo.gl short links, you can continue to use all features of goo.gl console for a period of one year, until March 30, 2019, when we will discontinue the console. You can manage all your short links and their analytics through the goo.gl console during this period.

After March 30, 2019, all links will continue to redirect to the intended destination. Your existing short links will not be migrated to the Firebase console; however, you will be able to export your link information from the goo.gl console.

Starting May 30, 2018, only projects that have accessed URL Shortener APIs before today can create short links. To create new short links, we recommend FDL APIs. FDL short links will automatically detect the user's platform and send the user to either the web or your app, as appropriate.
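As a hedged sketch of creating an FDL short link, the function below builds a request body for the Firebase Dynamic Links REST API's `shortLinks` endpoint. The domain prefix, deep link, Android package name, iOS bundle ID, and the `buildShortLinkRequest` name are placeholders; check the FDL API reference and migration guide for the fields your project needs.

```javascript
// Request body for the Firebase Dynamic Links REST API:
//   POST https://firebasedynamiclinks.googleapis.com/v1/shortLinks?key=<WEB_API_KEY>
// All values passed in are placeholders for your own project settings.
function buildShortLinkRequest(deepLink, { domainUriPrefix, androidPackageName, iosBundleId }) {
  const url = new URL(deepLink); // throws a TypeError on a malformed deep link
  return {
    dynamicLinkInfo: {
      domainUriPrefix,
      link: url.toString(),
      // Platform info lets FDL route Android and iOS users to your app (or its
      // store listing if not installed) and other users to the web link.
      androidInfo: { androidPackageName },
      iosInfo: { iosBundleId },
    },
    // SHORT requests the shortest unique suffix; UNGUESSABLE requests a longer,
    // hard-to-guess one.
    suffix: { option: 'SHORT' },
  };
}
```

The response to this request includes the generated `shortLink`, which you can share wherever you previously used a goo.gl link.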

If you are already calling URL Shortener APIs to manage goo.gl short links, you can continue to use them for a period of one year, until March 30, 2019, when we will discontinue the APIs. For developers looking to migrate to FDL, see our migration guide.

As it is for consumers, all links will continue to redirect to the intended destination after March 30, 2019. However, existing short links will not be migrated to the Firebase console/API.

URL Shortener has been a great tool that we're proud to have built. As we look towards the future, we're excited about the possibilities of Firebase Dynamic Links, particularly when it comes to dynamic platform detection and links that survive the app installation process. We hope you are too!

Back in October, we were thrilled to launch a beta version of Firebase Crashlytics. As the top ranked mobile app crash reporter for over 3 years running, Crashlytics helps you track, prioritize, and fix stability issues in realtime. It's been exciting to see all the positive reactions, as thousands of you have upgraded to Crashlytics in Firebase!

Today, we're graduating Firebase Crashlytics out of beta. As the default crash reporter for Firebase going forward, Crashlytics is the next evolution of the crash reporting capabilities of our platform. It empowers you to achieve everything you want to with Firebase Crash Reporting, plus much more.

This release include several major new features in addition to our stamp of approval when it comes to service reliability. Here's what's new.

We heard from many of you that you love Firebase Crash Reporting's "breadcrumbs" feature. (Breadcrumbs are the automatically created Analytics events that help you retrace user actions preceding a crash.) Starting today, you can see these breadcrumbs within the Crashlytics section of the Firebase console, helping you to triage issues more easily.

To use breadcrumbs on Crashlytics, install the latest SDK and enable Google Analytics for Firebase. If you already have Analytics enabled, the feature will automatically start working.

By broadly analyzing aggregated crash data for common trends, Crashlytics automatically highlights potential root causes and gives you additional context on the underlying problems. For example, it can reveal how widespread incorrect UIKit rendering was in your app so you would know to address that issue first. Crash insights allows you to make more informed decisions on what actions to take, save time on triaging issues, and maximize the impact of your debugging efforts.

From our community:

- Marc Bernstein, Software Development Team Lead, Hudl

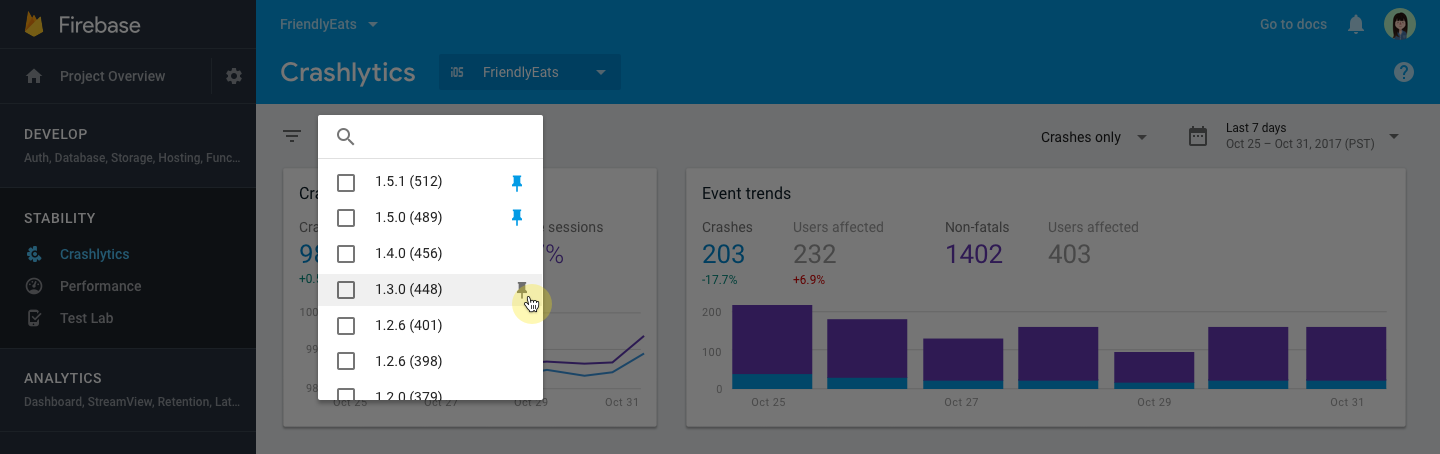

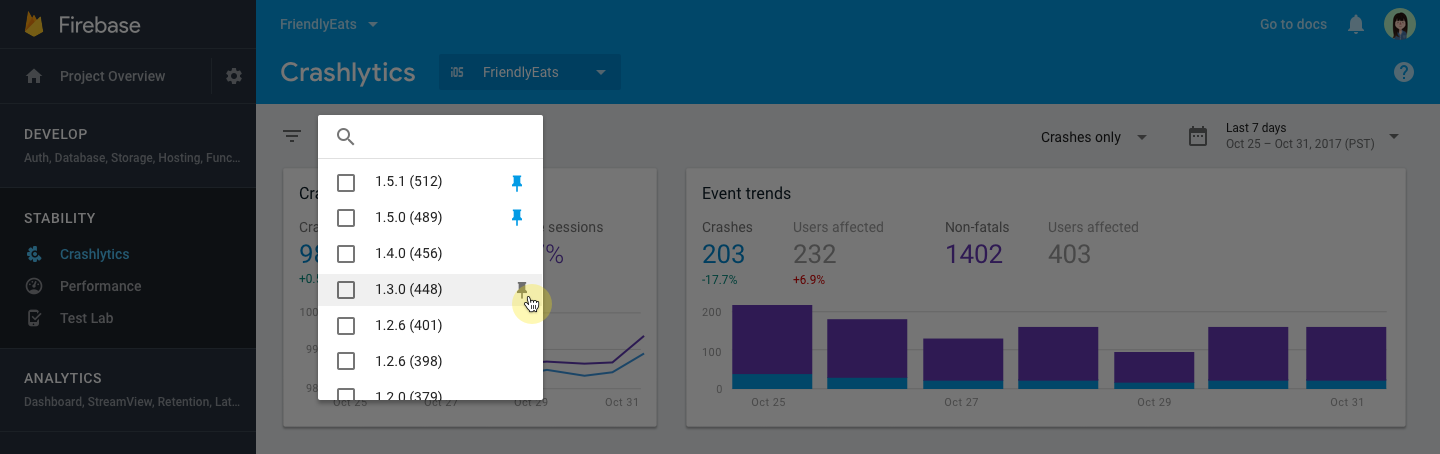

Generally, you have a few builds you care most about, while others aren't as important at the moment. With this new release of Crashlytics, you can now "pin" your most important builds which will appear at the top of the console. Your pinned builds will also appear on your teammates' consoles so it's easier to collaborate with them. This can be especially helpful when you have a large team with hundreds of builds and millions of users.

To show you stability issues, Crashlytics automatically uploads your dSYM files in the background to symbolicate your crashes. However, some complex situations can arise (i.e. Bitcode compiled apps) and prevent your dSYMs from being uploaded properly. That's why today we're also releasing a new dSYM uploader tool within your Crashlytics console. Now, you can manually upload your dSYM for cases where it cannot be automatically uploaded.

With today's GA release of Firebase Crashlytics, we've decided to sunset Firebase Crash Reporting, so we can best serve you by focusing our efforts on one crash reporter. Starting today, you'll notice the console has changed to only list Crashlytics in the navigation. If you need to access your existing crash data in Firebase Crash Reporting, you can use the app picker to switch from Crashlytics to Crash Reporting.

Firebase Crash Reporting will continue to be functional until September 8th, 2018 - at which point it will be retired fully.

Upgrading to Crashlytics is easy: just visit your project's console, choose Crashlytics in the left navigation and click "Set up Crashlytics":

If you're currently using both Firebase and Fabric, you can now link the two to see your existing crash data within the Firebase console. To get started, click "Link app in Fabric" within the console and go through the flow on fabric.io:

If you are only using Fabric right now, you don't need to take any action. We'll be building out a new flow in the coming months to help you seamlessly link your existing app(s) from Fabric to Firebase. In the meantime, we encourage you to try other Firebase products.

We are excited to bring you the best-in class crash reporter in the Firebase console. As always, let us know your thoughts and we look forward to continuing to improve Crashlytics. Happy debugging!

#crashImg {

width: 100%;

margin: 10px 0;

}

Originally posted on the Firebase Blog by Jason St. Pierre, Product Manager.

Back in October, we were thrilled to launch a beta version of Firebase Crashlytics. As the top ranked mobile app crash reporter for over 3 years running, Crashlytics helps you track, prioritize, and fix stability issues in realtime. It's been exciting to see all the positive reactions, as thousands of you have upgraded to Crashlytics in Firebase!

Today, we're graduating Firebase Crashlytics out of beta. As the default crash reporter for Firebase going forward, Crashlytics is the next evolution of the crash reporting capabilities of our platform. It empowers you to achieve everything you want to with Firebase Crash Reporting, plus much more.

This release includes several major new features in addition to our stamp of approval when it comes to service reliability. Here's what's new.

We heard from many of you that you love Firebase Crash Reporting's "breadcrumbs" feature. (Breadcrumbs are the automatically created Analytics events that help you retrace user actions preceding a crash.) Starting today, you can see these breadcrumbs within the Crashlytics section of the Firebase console, helping you to triage issues more easily.

To use breadcrumbs on Crashlytics, install the latest SDK and enable Google Analytics for Firebase. If you already have Analytics enabled, the feature will automatically start working.
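To illustrate what a breadcrumb trail is, the sketch below takes timestamped Analytics-style events and a crash time and returns the last few events that happened before the crash. Crashlytics collects and displays breadcrumbs for you; the event shape and the `breadcrumbsBefore` function are hypothetical and only model the idea.

```javascript
// Hypothetical event shape: { name, timestamp } with timestamps in milliseconds.
// Returns up to `limit` events at or before crashTime, oldest first.
function breadcrumbsBefore(events, crashTime, limit = 5) {
  if (limit <= 0) return [];
  return events
    .filter((e) => e.timestamp <= crashTime) // filter copies, so sort won't mutate input
    .sort((a, b) => a.timestamp - b.timestamp)
    .slice(-limit);
}
```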

By broadly analyzing aggregated crash data for common trends, Crashlytics automatically highlights potential root causes and gives you additional context on the underlying problems. For example, it can reveal how widespread incorrect UIKit rendering was in your app so you would know to address that issue first. Crash insights allows you to make more informed decisions on what actions to take, save time on triaging issues, and maximize the impact of your debugging efforts.
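As a toy model of the aggregation step this kind of analysis builds on, the sketch below groups crash reports by a simple signature (exception type plus top stack frame) and ranks the groups by the number of affected users, then by crash count. The report fields and the `rankCrashGroups` function are hypothetical; this is not Crashlytics' actual clustering or insight logic.

```javascript
// Hypothetical report shape: { exceptionType, topFrame, userId }.
function rankCrashGroups(reports) {
  const groups = new Map();
  for (const r of reports) {
    const key = `${r.exceptionType} @ ${r.topFrame}`;
    if (!groups.has(key)) groups.set(key, { signature: key, crashes: 0, users: new Set() });
    const g = groups.get(key);
    g.crashes += 1;
    g.users.add(r.userId); // count each user once per group
  }
  return [...groups.values()]
    .map((g) => ({ signature: g.signature, crashes: g.crashes, affectedUsers: g.users.size }))
    // Most widespread issues first; ties broken by total crash count.
    .sort((a, b) => b.affectedUsers - a.affectedUsers || b.crashes - a.crashes);
}
```

Ranking by affected users rather than raw crash count keeps one user's repeated crashes from outweighing an issue that hits many people.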

From our community:

- Marc Bernstein, Software Development Team Lead, Hudl

Generally, you have a few builds you care most about, while others aren't as important at the moment. With this new release of Crashlytics, you can now "pin" your most important builds which will appear at the top of the console. Your pinned builds will also appear on your teammates' consoles so it's easier to collaborate with them. This can be especially helpful when you have a large team with hundreds of builds and millions of users.

To show you stability issues, Crashlytics automatically uploads your dSYM files in the background to symbolicate your crashes. However, some complex situations (e.g., Bitcode-compiled apps) can prevent your dSYMs from being uploaded properly. That's why today we're also releasing a new dSYM uploader tool within your Crashlytics console. Now you can manually upload your dSYM in cases where it cannot be uploaded automatically.

With today's GA release of Firebase Crashlytics, we've decided to sunset Firebase Crash Reporting, so we can best serve you by focusing our efforts on one crash reporter. Starting today, you'll notice the console has changed to only list Crashlytics in the navigation. If you need to access your existing crash data in Firebase Crash Reporting, you can use the app picker to switch from Crashlytics to Crash Reporting.

Firebase Crash Reporting will continue to be functional until September 8th, 2018, at which point it will be retired fully.

Upgrading to Crashlytics is easy: just visit your project's console, choose Crashlytics in the left navigation and click "Set up Crashlytics":

If you're currently using both Firebase and Fabric, you can now link the two to see your existing crash data within the Firebase console. To get started, click "Link app in Fabric" within the console and go through the flow on fabric.io:

If you are only using Fabric right now, you don't need to take any action. We'll be building out a new flow in the coming months to help you seamlessly link your existing app(s) from Fabric to Firebase. In the meantime, we encourage you to try other Firebase products.

We are excited to bring you the best-in-class crash reporter in the Firebase console. As always, let us know your thoughts and we look forward to continuing to improve Crashlytics. Happy debugging!

Today we're excited to launch Cloud Firestore, a fully-managed NoSQL document database for mobile and web app development. It's designed to easily store and sync app data at global scale, and it's now available in beta.

Key features of Cloud Firestore include:

And of course, we've aimed for the simplicity and ease-of-use that is always top priority for Firebase, while still making sure that Cloud Firestore can scale to power even the largest apps.

Managing app data is still hard; you have to scale servers, handle intermittent connectivity, and deliver data with low latency.

We've optimized Cloud Firestore for app development, so you can focus on delivering value to your users and shipping better apps, faster. Cloud Firestore:

As you may have guessed from the name, Cloud Firestore was built in close

collaboration with the Google Cloud Platform team.

This means it's a fully managed product, built from the ground up to

automatically scale. Cloud Firestore is a multi-region replicated database that

ensures once data is committed, it's durable even in the face of unexpected

disasters. Not only that, but despite being a distributed database, it's also

strongly consistent, removing tricky edge cases to make building apps easier

regardless of scale.

It also means that delivering a great server-side experience for backend

developers is a top priority. We're launching SDKs for Java, Go, Python, and

Node.js today, with more languages coming in the future.

Over the last 3 years Firebase has grown to become Google's app development platform; it now has 16 products to build and grow your app. If you've used Firebase before, you know we already offer a database, the Firebase Realtime Database, which helps with some of the challenges listed above.

The Firebase Realtime Database, with its client SDKs and real-time capabilities, is all about making app development faster and easier. Since its launch, it has been adopted by hundreds of thousands of developers, and as its adoption grew, so did the variety of usage patterns. Developers began using the Realtime Database for more complex data and to build bigger apps, pushing the limits of the JSON data model and the performance of the database at scale.

Cloud Firestore is inspired by what developers love most about the Firebase Realtime Database while also addressing its key limitations like data structuring, querying, and scaling.

So, if you're a Firebase Realtime Database user today, we think you'll love Cloud Firestore. However, this does not mean that Cloud Firestore is a drop-in replacement for the Firebase Realtime Database. For some use cases, it may make sense to use the Realtime Database to optimize for cost and latency, and it's also easy to use both databases together. You can read a more in-depth comparison between the two databases here.

We're continuing development on both databases, and they'll both be available in our console and documentation.

Cloud Firestore enters public beta starting today. If you're comfortable using a beta product, you should give it a spin on your next project! Here are some of the companies and startups who are already building with Cloud Firestore:

Get started by visiting the database tab in your Firebase console. For more details, see the documentation, pricing, code samples, and performance limitations during beta, and view our open source iOS and JavaScript SDKs on GitHub.

We can't wait to see what you build and hear what you think of Cloud Firestore!

Whether it's opening night for a Broadway musical or launch day for your app, both are thrilling times for everyone involved. Our agency, Posse, collaborated with Hamilton to design, build, and launch the official Hamilton app... in only three short months.

We decided to use Firebase, Google's mobile development platform, for our backend and infrastructure, while we used Flutter, a new UI toolkit for iOS and Android, for our front-end. In this post, we share how we did it.

We love to spend time designing beautiful UIs, testing new interactions, and iterating with clients, and we don't want to be distracted by setting up and maintaining servers. To stay focused on the app and our users, we implemented a full serverless architecture and made heavy use of Firebase.

A key feature of the app is the ticket lottery, which offers fans a chance to get tickets to the constantly sold-out Hamilton show. We used Cloud Functions for Firebase, and a data flow architecture we learned about at Google I/O, to coordinate the lottery workflow between the mobile app, custom business logic, and partner services.

For example, when someone enters the lottery, the app first writes data to specific nodes in the Realtime Database, and the database's security rules help to ensure that the data is valid. The write triggers a Cloud Function, which runs business logic and stores its result in a new node in the Realtime Database. The newly written result data is then pushed automatically to the app.
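
To make the lottery flow above concrete, here is a minimal in-memory sketch in Java. It is not the Realtime Database or Cloud Functions API: `LotteryFlowSketch`, the `/entries/` and `/results/` paths, and the validation rule are all hypothetical stand-ins for real security rules and a deployed function. The last step of the real flow, pushing the result to connected apps, is left out here.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiConsumer;

/**
 * Hypothetical in-memory model of the lottery data flow:
 * client write -> validation -> triggered handler -> result written back.
 * Not the Firebase API; paths and rules are made up for illustration.
 */
class LotteryFlowSketch {
    private final Map<String, String> db = new HashMap<>();
    private final Map<String, BiConsumer<String, String>> triggers = new HashMap<>();

    /** Registers a handler that runs after each accepted client write under a path prefix. */
    void onWrite(String pathPrefix, BiConsumer<String, String> handler) {
        triggers.put(pathPrefix, handler);
    }

    /** Client write: stored only if the stand-in rule accepts it, then matching triggers fire. */
    boolean clientWrite(String path, String value) {
        if (!isValid(path, value)) {
            return false; // plays the role of a security rule rejecting the write
        }
        db.put(path, value);
        for (Map.Entry<String, BiConsumer<String, String>> t : triggers.entrySet()) {
            if (path.startsWith(t.getKey())) {
                t.getValue().accept(path, value);
            }
        }
        return true;
    }

    /** Trusted server-side write; it does not fire triggers, so a handler cannot loop on itself. */
    void serverWrite(String path, String value) {
        db.put(path, value);
    }

    String read(String path) {
        return db.get(path);
    }

    // Hypothetical rule: clients may only write non-empty entries under /entries/{uid}.
    private static boolean isValid(String path, String value) {
        return path.startsWith("/entries/") && value != null && !value.isEmpty();
    }
}
```

Keeping the handler's result write separate from client writes (and not re-firing triggers) mirrors a common precaution with database-triggered functions: a function that writes back to the path it listens on can otherwise trigger itself repeatedly.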

Because of Hamilton's intense fan following, we wanted to make sure that app users could get news the instant it was published. So we built a custom, web-based Content Management System (CMS) for the Hamilton team that used Firebase Realtime Database to store and retrieve data. The Realtime Database eliminated the need for a "pull to refresh" feature in the app. When new content is published via the CMS, the update is stored in Firebase Realtime Database and every app user automatically sees the update. No refresh, reload, or pull required!
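
The "no pull to refresh" behavior comes from clients subscribing once and having new data pushed to them. The sketch below shows that idea as a plain observer pattern in Java. `NewsFeedSketch` is a hypothetical class, not the Realtime Database client API, although, like a Realtime Database value listener, it delivers the current value as soon as a listener subscribes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Hypothetical push-based feed: subscribers register once and receive every
 * newly published item, so no client ever has to poll.
 */
class NewsFeedSketch {
    private final List<Consumer<String>> listeners = new ArrayList<>();
    private String latest; // most recently published item; null until the first publish

    /** Registers a listener; it immediately receives the current item, if there is one. */
    void subscribe(Consumer<String> listener) {
        listeners.add(listener);
        if (latest != null) {
            listener.accept(latest);
        }
    }

    /** CMS side: stores the item and pushes it to every registered listener. */
    void publish(String item) {
        latest = item;
        for (Consumer<String> listener : listeners) {
            listener.accept(item);
        }
    }
}
```

A device that subscribes late still starts from the latest item, then receives every later update without asking for it.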

Besides powering our lottery integration, Cloud Functions was also extremely valuable for creating user profiles, sending push notifications, and running our #HamCam, a custom Hamilton selfie and photo-taking experience. For #HamCam, Cloud Functions resized the images, saved them in Cloud Storage, and then updated the database. By taking care of the infrastructure work of storing and managing the photos, Firebase freed us up to focus on making the camera fun and full of Hamilton style.
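
Resizing an upload before storing it mostly comes down to choosing output dimensions that keep the aspect ratio. This Java sketch computes only those dimensions. The post does not say what sizes the #HamCam function produced, so `ThumbnailMath` and its `maxEdge` parameter are hypothetical, and the actual pixel resampling and Cloud Storage upload are omitted.

```java
/**
 * Hypothetical helper: computes output dimensions that fit inside a
 * maxEdge x maxEdge box while preserving the original aspect ratio.
 */
final class ThumbnailMath {
    private ThumbnailMath() {}

    /** Returns {width, height}; images that already fit are returned unchanged (never upscaled). */
    static int[] fitWithin(int width, int height, int maxEdge) {
        if (width <= 0 || height <= 0 || maxEdge <= 0) {
            throw new IllegalArgumentException("dimensions must be positive");
        }
        int longest = Math.max(width, height);
        if (longest <= maxEdge) {
            return new int[] {width, height};
        }
        // Scale both sides by the same factor so the longest side becomes maxEdge.
        double scale = (double) maxEdge / longest;
        // Round to whole pixels, keeping at least 1px for extreme aspect ratios.
        int w = Math.max(1, (int) Math.round(width * scale));
        int h = Math.max(1, (int) Math.round(height * scale));
        return new int[] {w, h};
    }
}
```

For example, a 4032x3024 photo with `maxEdge = 1024` comes out at 1024x768.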

With only three months to design and deliver the app, we knew we needed to iterate quickly on the UX and UI. Flutter's hot reload development cycle meant we could make a change in our UI code and, in about a second, see the change reflected on our simulators and phones. No rebuilding, recompiling, or multi-second pauses required! Even the state of the app was preserved between hot reloads, making it very fast for us to iterate on the UI with our designers.

We used Flutter's reactive UI framework to implement Hamilton's iconic brand with custom UI elements. Flutter's "everything is a widget" approach made it easy for us to compose custom UIs from a rich set of building blocks provided by the framework. And, because Flutter runs on both iOS and Android, we were able to spend our time creating beautiful designs instead of porting the UI.

The FlutterFire project helped us access Firebase Analytics, Firebase Authentication, and Realtime Database from the app code. And because Flutter is open source and easy to extend, we even built a custom router library that helped us organize the app's UI code.

We enjoyed building the Hamilton app (find it on the Play Store or the App Store) in a way that allowed us to focus on our users and experiment with new app ideas and experiences. And based on our experience, we'd happily recommend serverless architectures with Firebase and customized UI designs with Flutter as powerful ways for you to save time building your app.

We already have plans to keep developing the Hamilton app in new ways, and we can't wait to release them soon!

If you want to learn more about Firebase or Flutter, we recommend the Firebase docs, the Firebase channel on YouTube, and the Flutter website.

It's been an exciting year! Last May, we expanded Firebase into our unified app platform, building on the original backend-as-a-service and adding products to help developers grow their user base, as well as test and monetize their apps. Hearing from developers like Wattpad, who built an app using Firebase in only 3 weeks, makes all the hard work worthwhile.

We're thrilled by the initial response from the community, but we believe our journey is just getting started. Let's talk about some of the enhancements coming to Firebase today.

In January, we announced that we were welcoming the Fabric team to Firebase. Fabric initially grabbed our attention with their array of products, including the industry-leading crash reporting tool, Crashlytics. As we got to know the team better, we were even more impressed by how closely aligned our missions are: to help developers build better apps and grow successful businesses. Over the last several months, we've been working closely with the Fabric team to bring the best of our platforms together.

We plan to make Crashlytics the primary crash reporting product in Firebase. If you don't already use a crash reporting tool, we recommend you take a look at Crashlytics and see what it can do for you. You can get started by following the Fabric documentation.

Phone number authentication has been the biggest request for Firebase Authentication, so we're excited to announce that we've worked with the Fabric Digits team to bring phone auth to our platform. You can now let your users sign in with their phone numbers, in addition to traditional email/password or identity providers like Google or Facebook. This gives you a comprehensive authentication solution no matter who your users are or how they like to log in.

At the same time, the Fabric team will be retiring the Digits name and SDK. If you currently use Digits, over the next couple of weeks we'll be rolling out the ability to link your existing Digits account with Firebase and swap in the Firebase SDK for the Digits SDK. Go to the Digits blog to learn more.

We recognize that poor app performance and stability are the top reasons for users to leave bad ratings on your app and possibly churn altogether. As part of our effort to help you build better apps, we're pleased to announce the beta launch of Performance Monitoring.

Firebase Performance Monitoring is a new free tool that helps you understand when your user experience is being impacted by poorly performing code or challenging network conditions. You can learn more and get started with Performance Monitoring in the Firebase documentation.

Analytics has been core to the Firebase platform since we launched last I/O. We know that understanding your users is the number one way to make your app successful, so we're continuing to invest in improving our analytics product.

First off, you may notice that you're starting to see the name "Google Analytics for Firebase" around our documentation. Our analytics solution was built in conjunction with the Google Analytics team, and the reports are available both in the Firebase console and the Google Analytics interface. So, we're renaming Firebase Analytics to Google Analytics for Firebase, to reflect that your app analytics data are shared across both.

For those of you who monetize your app with AdMob, we've started sharing data between the two platforms, helping you understand the true lifetime value (LTV) of your users, from both purchases and AdMob revenue. You'll see these new insights surfaced in the updated Analytics dashboard.
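
As a rough illustration of why combining the two revenue sources matters, the Java sketch below adds purchase revenue and ad revenue per user and averages over all users. `LtvSketch` and its simple averaging are assumptions for illustration, not how the Analytics dashboard actually computes LTV.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical LTV arithmetic: a user's lifetime value is their purchase
 * revenue plus the ad revenue attributed to them.
 */
final class LtvSketch {
    private final Map<String, Double> purchases = new HashMap<>();
    private final Map<String, Double> adRevenue = new HashMap<>();

    void addPurchase(String userId, double amount) {
        purchases.merge(userId, amount, Double::sum);
    }

    void addAdRevenue(String userId, double amount) {
        adRevenue.merge(userId, amount, Double::sum);
    }

    /** Lifetime value of one user: purchases plus ad revenue (0 if the user has neither). */
    double lifetimeValue(String userId) {
        return purchases.getOrDefault(userId, 0.0) + adRevenue.getOrDefault(userId, 0.0);
    }

    /** Average LTV over all acquired users; users with no revenue count as 0. */
    double averageLifetimeValue(int totalUsers) {
        if (totalUsers <= 0) {
            throw new IllegalArgumentException("totalUsers must be positive");
        }
        double total = 0;
        for (double v : purchases.values()) total += v;
        for (double v : adRevenue.values()) total += v;
        return total / totalUsers;
    }
}
```

Dividing by the total user count, rather than only by users who generated revenue, keeps non-paying users in the average, and counting ad revenue means a user who never buys anything can still have a non-zero value.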

Many of you have also asked for analytics insights into custom events and parameters. Starting today, you can register up to 50 custom event parameters and see their details in your Analytics reports. Learn more about custom parameter reporting.

Firebase's mission is to help all developers build better apps. In that spirit, today we're announcing expanded platform and vertical support for Firebase.

First of all, as Swift has become the preferred language for many iOS developers, we've updated our SDK to handle Swift language nuances, making Swift development a native experience on Firebase.

We've also improved Firebase Cloud Messaging by adding support for token-based authentication for APNs, and greatly simplifying the connection and registration logic in the client SDK.

Second, we've heard from our game developer community that one of the most important stats you monitor is frames per second (FPS). So, we've built Game Loop support and FPS monitoring into Test Lab for Android, allowing you to evaluate your game's frame rate before you deploy. Coupled with the addition of Unity plugins and a C++ SDK, which we announced at GDC this year, we think that Firebase is a great option for game developers. To see an example of a game built on top of Firebase, check out our Mecha Hamster app on GitHub.
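
Frame-rate monitoring rests on simple arithmetic over frame timestamps: with N frames spanning an elapsed time T, the average rate is (N - 1) / T, and a frame is slow when its interval exceeds the 1000 / targetFps millisecond budget. The Java sketch below is a hypothetical illustration of that arithmetic, not Test Lab's Game Loop implementation; the millisecond timestamps and the target rate are assumed inputs.

```java
/**
 * Hypothetical FPS helpers operating on frame timestamps in milliseconds.
 */
final class FpsSketch {
    private FpsSketch() {}

    /** Average FPS: number of frame intervals divided by elapsed seconds. */
    static double averageFps(long[] frameTimesMs) {
        if (frameTimesMs.length < 2) {
            throw new IllegalArgumentException("need at least two frames");
        }
        long elapsedMs = frameTimesMs[frameTimesMs.length - 1] - frameTimesMs[0];
        if (elapsedMs <= 0) {
            throw new IllegalArgumentException("timestamps must increase");
        }
        return (frameTimesMs.length - 1) * 1000.0 / elapsedMs;
    }

    /** Counts frame intervals that exceed the per-frame budget for targetFps. */
    static int slowFrames(long[] frameTimesMs, double targetFps) {
        double budgetMs = 1000.0 / targetFps; // e.g. about 16.7 ms at 60 FPS
        int slow = 0;
        for (int i = 1; i < frameTimesMs.length; i++) {
            if (frameTimesMs[i] - frameTimesMs[i - 1] > budgetMs) {
                slow++;
            }
        }
        return slow;
    }
}
```

Reporting slow frames alongside the average matters because a single long stall can be hidden in a healthy-looking average: frames at 0, 16, 32, 48, and 100 ms average 40 FPS, but only one interval actually misses the 60 FPS budget.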

Finally, we've taken a big first step towards open sourcing our SDKs. We believe in open source software, not only because transparency is an important goal, but also because we know that the greatest innovation happens when we all collaborate. You can view our new repos on our open source project page and learn more about our decision in this blog post.

In March, we launched Cloud Functions for Firebase, which lets you run custom backend code in response to events triggered by Firebase features and HTTP requests. This lets you do things like send a notification when a user signs up or automatically create thumbnails when an image is uploaded to Cloud Storage.

Today, in an effort to better serve our web developer community, we're expanding Firebase Hosting to integrate with Cloud Functions. This means that, in addition to serving static assets for your web app, you can now serve dynamic content, generated by Cloud Functions, through Firebase Hosting. For those of you building progressive web apps, Firebase Hosting + Cloud Functions lets you go completely serverless. You can learn more by visiting our documentation.
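
The Hosting integration can be pictured as a lookup order: if a static file matches the request path, serve it; otherwise let a function generate the response. The Java sketch below models only that order with a hypothetical `HostingRouterSketch`. Real Firebase Hosting also applies redirects, headers, and 404 handling, which are left out here, and in a real project the routing to a function is configured with rewrites in `firebase.json` rather than written in code.

```java
import java.util.Map;
import java.util.function.Function;

/**
 * Hypothetical router: static assets are served when they exist; every other
 * path is handed to a dynamic handler standing in for a Cloud Function.
 */
final class HostingRouterSketch {
    private final Map<String, String> staticFiles;          // request path -> file contents
    private final Function<String, String> dynamicHandler;  // stand-in for a Cloud Function

    HostingRouterSketch(Map<String, String> staticFiles, Function<String, String> dynamicHandler) {
        this.staticFiles = staticFiles;
        this.dynamicHandler = dynamicHandler;
    }

    /** Serves a matching static file first; otherwise renders the response dynamically. */
    String handle(String path) {
        String file = staticFiles.get(path);
        if (file != null) {
            return file;
        }
        return dynamicHandler.apply(path);
    }
}
```

Checking static files first keeps cacheable assets off the function path, so only requests that genuinely need server-side rendering pay for a function invocation.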

Our goal is to build the best developer experience: easy-to-use products, great documentation, and intuitive APIs. And the best resource that we have for improving Firebase is you! Your questions and feedback continuously push us to make Firebase better.

In light of that, we're excited to announce a Firebase Alpha program, where you will have the opportunity to test the cutting edge of our products. Things might not be perfect (in fact, we can almost guarantee they won't be), but by participating in the alpha community, you'll help define the future of Firebase.

If you want to get involved, please register your interest in the Firebase Alpha form.

Thank you for your support, enthusiasm, and, most importantly, feedback. The Firebase community is the reason that we've been able to grow and improve our platform at such an incredible pace over the last year. We're excited to continue working with you to build simple, intuitive products for developing apps and growing mobile businesses. To get started with Firebase today, visit our newly redesigned website. We're excited to see what you build!

Originally posted on the Firebase Blog by Francis Ma, Firebase Group Product Manager

In September, we launched a new way to search for content in apps on Android phones. With this update, users were able to find personal content like messages, notes, music and more across apps like OpenTable, Ticketmaster, Evernote, Glide, Asana, Gmail, and Google Keep from a single search box. Today, we're inviting all Android developers to enable this functionality for their apps.

Starting with version 10.0, the Firebase App Indexing API on Android lets apps add their content to Google's on-device index in the background, and update it in real time as users make changes in the app. We've designed the API with three principles in mind:

There are several predefined data types that make it easy to represent common things such as messages, notes, and songs, or you can add custom types to represent additional items. Plus, logging user actions like a user listening to a specific song provides an important signal to help rank user content across the Google app.

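import com.google.firebase.appindexing.FirebaseAppIndex;
import com.google.firebase.appindexing.Indexable;
import com.google.firebase.appindexing.builders.Indexables;

// Describe the user's shopping list as a note-type digital document.
Indexable note = Indexables.noteDigitalDocumentBuilder()
        .setUrl("http://example.net/users/42/lists/23")
        .setName("Shopping list")
        .setText("steak, pasta, wine")
        .setImage("http://example.net/images/shopping.jpg")
        .build();

// Insert the note into the on-device index, or update it if it is already indexed.
FirebaseAppIndex.getInstance().update(note);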

Example of adding or updating a user's shopping list in the on-device index.

Integrating with Firebase App Indexing helps increase user engagement with your app, as users can get back to their personal content in an instant with Google Search. Because that data is indexed directly on the device, this even works when offline.

To get started, check out our implementation guide and codelab.

```sql
SELECT
  user_dim.app_info.app_instance_id,
  user_dim.device_info.device_category,
  user_dim.device_info.user_default_language,
  user_dim.device_info.platform_version,
  user_dim.device_info.device_model,
  user_dim.geo_info.country,
  user_dim.geo_info.city,
  user_dim.app_info.app_version,
  user_dim.app_info.app_store,
  user_dim.app_info.app_platform
FROM
  [firebase-analytics-sample-data:ios_dataset.app_events_20160601]
```

```sql
SELECT
  user_dim.geo_info.country as country,
  EXACT_COUNT_DISTINCT(user_dim.app_info.app_instance_id) as users
FROM
  [firebase-analytics-sample-data:android_dataset.app_events_20160601],
  [firebase-analytics-sample-data:ios_dataset.app_events_20160601]
GROUP BY
  country
ORDER BY
  users DESC
```
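In BigQuery legacy SQL, listing two tables separated by a comma in the FROM clause means UNION ALL, and EXACT_COUNT_DISTINCT returns an exact count of distinct values. The Java sketch below mirrors what the country query computes over a few made-up rows: combine the Android and iOS rows, then count distinct `app_instance_id` values per country. The `Row` type and the sample values in the test are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Illustrative in-memory equivalent of:
//   SELECT country, EXACT_COUNT_DISTINCT(app_instance_id) AS users
//   FROM android_table, ios_table GROUP BY country ORDER BY users DESC
// Row is a hypothetical stand-in for one event row in the export.
class CountryUsers {
    static final class Row {
        final String country;
        final String appInstanceId;

        Row(String country, String appInstanceId) {
            this.country = country;
            this.appInstanceId = appInstanceId;
        }
    }

    static Map<String, Integer> usersByCountry(List<Row> android, List<Row> ios) {
        // The comma in legacy SQL's FROM clause behaves like UNION ALL.
        List<Row> all = new ArrayList<>(android);
        all.addAll(ios);

        // Collect the distinct app instance IDs seen in each country.
        Map<String, Set<String>> ids = new HashMap<>();
        for (Row r : all) {
            ids.computeIfAbsent(r.country, k -> new HashSet<>()).add(r.appInstanceId);
        }

        // Sort by distinct-user count (descending), then by country name
        // so that ties come out in a deterministic order.
        List<Map.Entry<String, Set<String>>> entries = new ArrayList<>(ids.entrySet());
        entries.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue().size(), a.getValue().size());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });

        Map<String, Integer> result = new LinkedHashMap<>();
        for (Map.Entry<String, Set<String>> e : entries) {
            result.put(e.getKey(), e.getValue().size());
        }
        return result;
    }
}
```

Counting distinct IDs rather than rows matters because one app instance usually logs many events on the same day.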

```sql
SELECT
  user_dim.user_properties.value.value.string_value as language_code,
  EXACT_COUNT_DISTINCT(user_dim.app_info.app_instance_id) as users
FROM
  [firebase-analytics-sample-data:android_dataset.app_events_20160601],
  [firebase-analytics-sample-data:ios_dataset.app_events_20160601]
WHERE
  user_dim.user_properties.key = "language"
GROUP BY
  language_code
ORDER BY
  users DESC
```

```sql
SELECT
  event_dim.name,
  COUNT(event_dim.name) as event_count
FROM
  [firebase-analytics-sample-data:android_dataset.app_events_20160601]
GROUP BY
  event_dim.name
ORDER BY
  event_count DESC
```

```sql
SELECT
  event_dim.params.value.int_value as virtual_currency_amt,
  COUNT(*) as num_times_spent
FROM
  [firebase-analytics-sample-data:android_dataset.app_events_20160601]
WHERE
  event_dim.name = "spend_virtual_currency"
  AND event_dim.params.key = "value"
GROUP BY
  1
ORDER BY
  num_times_spent DESC
```

```sql
SELECT
  user_dim.geo_info.city,
  COUNT(user_dim.geo_info.city) as city_count
FROM
  TABLE_DATE_RANGE([firebase-analytics-sample-data:android_dataset.app_events_], DATE_ADD('2016-06-07', -7, 'DAY'), CURRENT_TIMESTAMP()),
  TABLE_DATE_RANGE([firebase-analytics-sample-data:ios_dataset.app_events_], DATE_ADD('2016-06-07', -7, 'DAY'), CURRENT_TIMESTAMP())
GROUP BY
  user_dim.geo_info.city
ORDER BY
  city_count DESC
```
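In legacy SQL, TABLE_DATE_RANGE queries every daily table whose name is the given prefix followed by a YYYYMMDD date between the start and end timestamps, inclusive; here DATE_ADD('2016-06-07', -7, 'DAY') evaluates to 2016-05-31. The Java sketch below lists the table names such a range would cover. The `DailyTables` helper is hypothetical, and the fixed end date in the test stands in for CURRENT_TIMESTAMP().

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;

// Lists the daily table names that a legacy-SQL TABLE_DATE_RANGE over
// `prefix` would cover between `start` and `end` (both inclusive).
// Illustrative only: BigQuery matches tables that actually exist; it does
// not generate names like this.
class DailyTables {
    // BASIC_ISO_DATE formats a LocalDate as yyyyMMdd, e.g. 20160531.
    private static final DateTimeFormatter SUFFIX = DateTimeFormatter.BASIC_ISO_DATE;

    static List<String> tableNames(String prefix, LocalDate start, LocalDate end) {
        List<String> names = new ArrayList<>();
        for (LocalDate d = start; !d.isAfter(end); d = d.plusDays(1)) {
            names.add(prefix + d.format(SUFFIX));
        }
        return names;
    }
}
```

With an end date of 2016-06-07 the range spans eight daily tables, from `app_events_20160531` to `app_events_20160607`; a later end date simply extends the list.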

```sql
SELECT
  user_dim.app_info.app_platform as appPlatform,
  user_dim.device_info.device_category as deviceType,
  COUNT(user_dim.device_info.device_category) AS device_type_count
FROM
  TABLE_DATE_RANGE([firebase-analytics-sample-data:android_dataset.app_events_], DATE_ADD('2016-06-07', -7, 'DAY'), CURRENT_TIMESTAMP()),
  TABLE_DATE_RANGE([firebase-analytics-sample-data:ios_dataset.app_events_], DATE_ADD('2016-06-07', -7, 'DAY'), CURRENT_TIMESTAMP())
GROUP BY
  1, 2
ORDER BY
  device_type_count DESC
```

```sql
SELECT
  STRFTIME_UTC_USEC(eventTime, "%Y%m%d") as date,
  appPlatform,
  eventName,
  COUNT(*) totalEvents,
  EXACT_COUNT_DISTINCT(IF(userId IS NOT NULL, userId, fullVisitorid)) as users
FROM (
  SELECT
    fullVisitorid,
    openTimestamp,
    FORMAT_UTC_USEC(openTimestamp) firstOpenedTime,
    userIdSet,
    MAX(userIdSet) OVER(PARTITION BY fullVisitorid) userId,
    appPlatform,
    eventTimestamp,
    FORMAT_UTC_USEC(eventTimestamp) as eventTime,
    eventName
  FROM FLATTEN(
    (
      SELECT
        user_dim.app_info.app_instance_id as fullVisitorid,
        user_dim.first_open_timestamp_micros as openTimestamp,
        user_dim.user_properties.value.value.string_value,
        IF(user_dim.user_properties.key = 'user_id', user_dim.user_properties.value.value.string_value, null) as userIdSet,
        user_dim.app_info.app_platform as appPlatform,
        event_dim.timestamp_micros as eventTimestamp,
        event_dim.name AS eventName,
        event_dim.params.key,
        event_dim.params.value.string_value
      FROM
        TABLE_DATE_RANGE([firebase-analytics-sample-data:android_dataset.app_events_], DATE_ADD('2016-06-07', -7, 'DAY'), CURRENT_TIMESTAMP()),
        TABLE_DATE_RANGE([firebase-analytics-sample-data:ios_dataset.app_events_], DATE_ADD('2016-06-07', -7, 'DAY'), CURRENT_TIMESTAMP())
    ), user_dim.user_properties)
)
GROUP BY
  date, appPlatform, eventName
```
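The query above identifies a user by the `user_id` user property when one has been set for that app instance, and otherwise falls back to the app instance ID: `MAX(userIdSet) OVER(PARTITION BY fullVisitorid)` copies the (largest) non-null `user_id` onto every row of that instance. The Java sketch below reproduces only the `users` column per (date, platform, event) over hypothetical rows; the `Event` type and field names are illustrative assumptions. Duplicate rows produced by FLATTEN do not affect a distinct count, so the sketch ignores them.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

// Hypothetical model of the "user_id, else app instance ID" fallback.
class UserFallback {
    // One flattened row. userIdSet is non-null only on rows where the
    // 'user_id' user property was present (like the query's IF(...)).
    static final class Event {
        final String date, platform, name, appInstanceId, userIdSet;

        Event(String date, String platform, String name, String appInstanceId, String userIdSet) {
            this.date = date;
            this.platform = platform;
            this.name = name;
            this.appInstanceId = appInstanceId;
            this.userIdSet = userIdSet;
        }
    }

    // Returns "date|platform|event" -> number of distinct users.
    static Map<String, Integer> distinctUsers(List<Event> events) {
        // Step 1, like MAX(userIdSet) OVER (PARTITION BY fullVisitorid):
        // keep the largest non-null user_id seen for each app instance.
        Map<String, String> userIdByInstance = new HashMap<>();
        for (Event e : events) {
            if (e.userIdSet != null) {
                userIdByInstance.merge(e.appInstanceId, e.userIdSet,
                        (a, b) -> a.compareTo(b) >= 0 ? a : b);
            }
        }
        // Step 2: group and count distinct identities, using the user_id
        // when known and the app instance ID otherwise.
        Map<String, Set<String>> ids = new TreeMap<>();
        for (Event e : events) {
            String identity = userIdByInstance.getOrDefault(e.appInstanceId, e.appInstanceId);
            String key = e.date + "|" + e.platform + "|" + e.name;
            ids.computeIfAbsent(key, k -> new HashSet<>()).add(identity);
        }
        Map<String, Integer> result = new TreeMap<>();
        ids.forEach((k, v) -> result.put(k, v.size()));
        return result;
    }
}
```

Two app instances that report the same `user_id` count as one user, which is the point of preferring `user_id` over the per-install ID.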


Originally posted on Firebase blog

Posted by Laurence Moroney, Developer Advocate and Alfonso Gómez Jordana, Associate Product Manager

For most developers, building an authentication system for your app can feel a lot like paying taxes. Both are hard-to-understand tasks that you have no choice but to do, and both can have big consequences if you get them wrong. No one ever started a company to pay taxes, and no one ever built an app just so they could create a great login system. They just seem to be inescapable costs.

But now, you can at least free yourself from the auth tax. With Firebase Authentication, you can outsource your entire authentication system to Firebase so that you can concentrate on building great features for your app. Firebase Authentication makes it easier to get your users signed in without having to understand the complexities behind implementing your own authentication system. It offers a straightforward getting-started experience, optional UX components designed to minimize user friction, and is built on open standards and backed by Google infrastructure.

Implementing Firebase Authentication is relatively fast and easy. From the Firebase console, just choose the popular login methods that you want to offer (like Facebook, Google, Twitter, and email/password) and then add the Firebase SDK to your app. Your app will then be able to connect securely to the Firebase Realtime Database, Firebase Storage, or your own custom backend. If you have an auth system already, you can use Firebase Authentication as a bridge to other Firebase features.

Firebase Authentication also includes an open source UI library that streamlines building the many auth flows required to give your users a good experience. Password resets, account linking, and login hints that reduce the cognitive load around multiple login choices are all pre-built with Firebase Authentication UI. These flows are based on years of UX research optimizing the sign-in and sign-up journeys on Google, YouTube, and Android. The library includes Smart Lock for Passwords on Android, which has led to significant improvements in sign-in conversion for many apps. And because Firebase UI is open source, the interface is fully customizable, so it feels like a completely natural part of your app. If you prefer, you are also free to create your own UI from scratch using our client APIs.

And Firebase Authentication is built around openness and security. It leverages OAuth 2.0 and OpenID Connect, industry standards designed for security, interoperability, and portability. Members of the Firebase Authentication team helped design these protocols and used their expertise to weave in the latest security practices, such as ID tokens, revocable sessions, and native app anti-spoofing measures, to make your app easier to use and to avoid many common security problems. The code is also independently reviewed by the Google Security team, and the service is protected by Google's infrastructure.
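OpenID Connect ID tokens are JSON Web Tokens (JWTs): three base64url-encoded segments (header, payload, signature) joined by dots. The Java sketch below only splits a token and decodes its payload so you can see the claims it carries. It performs no signature, issuer, audience, or expiry check, so it must never be used to decide whether a token is trusted; a real backend should verify tokens with a vetted JWT library or the Firebase Admin SDK. The `IdTokenPeek` class is a hypothetical helper.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper for INSPECTING (not verifying) a JWT such as an
// OpenID Connect ID token. No signature, issuer, audience, or expiry
// checks are performed here.
class IdTokenPeek {
    // Returns the decoded JSON payload (the middle segment) of a JWT.
    static String payloadJson(String jwt) {
        // Limit -1 keeps a trailing empty signature segment, if present.
        String[] parts = jwt.split("\\.", -1);
        if (parts.length != 3) {
            throw new IllegalArgumentException("expected header.payload.signature");
        }
        // JWT segments use base64url without padding; Java's URL decoder
        // accepts input whose '=' padding has been omitted.
        byte[] bytes = Base64.getUrlDecoder().decode(parts[1]);
        return new String(bytes, StandardCharsets.UTF_8);
    }
}
```

Seeing the payload as plain JSON makes clear why the signature matters: anyone can read, or forge, these claims unless the server checks the signature against the issuer's keys.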